Managing multiple AI models has become a significant challenge for developers working with AI-assisted development tools and automated coding workflows. If you're a professional developer, DevOps engineer, or part of a development team implementing AI coding assistants, you understand this complexity.

Claude Code Router addresses these challenges by creating an intelligent foundation for coding workflows that require flexibility, cost optimization, and strategic model selection.

According to the official GitHub repository documentation, Claude Code Router is "a powerful tool to route Claude Code requests to different models and customize any request." It acts as a foundation layer for your coding infrastructure, letting you optimize how you interact with AI models while maintaining compatibility with Anthropic updates.

With 15 built-in transformers and support for 9+ provider platforms, Claude Code Router addresses three primary developer pain points: performance degradation, context management complexity, and integration challenges.

This guide covers everything required to master Claude Code Router, from basic setup to advanced optimization strategies. You'll learn to configure multi-provider environments, implement intelligent routing strategies, and apply community-tested best practices.

These insights come from real-world usage across GitHub Actions workflows, enterprise deployments, and individual development environments.

What is Claude Code Router?

Claude Code Router is an intelligent routing tool that directs Claude Code requests to different AI models and lets you customize any request. It serves as the infrastructure foundation for AI-assisted development workflows, enabling developers to optimize model selection while maintaining compatibility with Anthropic's evolving capabilities.

Claude Code Router ships with 15 built-in transformers and supports 9+ provider platforms. It is designed to address critical development challenges including cost optimization, performance tuning, and workflow automation.

Authority Source: According to the official GitHub repository documentation, Claude Code Router serves as the central orchestration layer for AI-assisted development workflows.

Core Architecture and Components

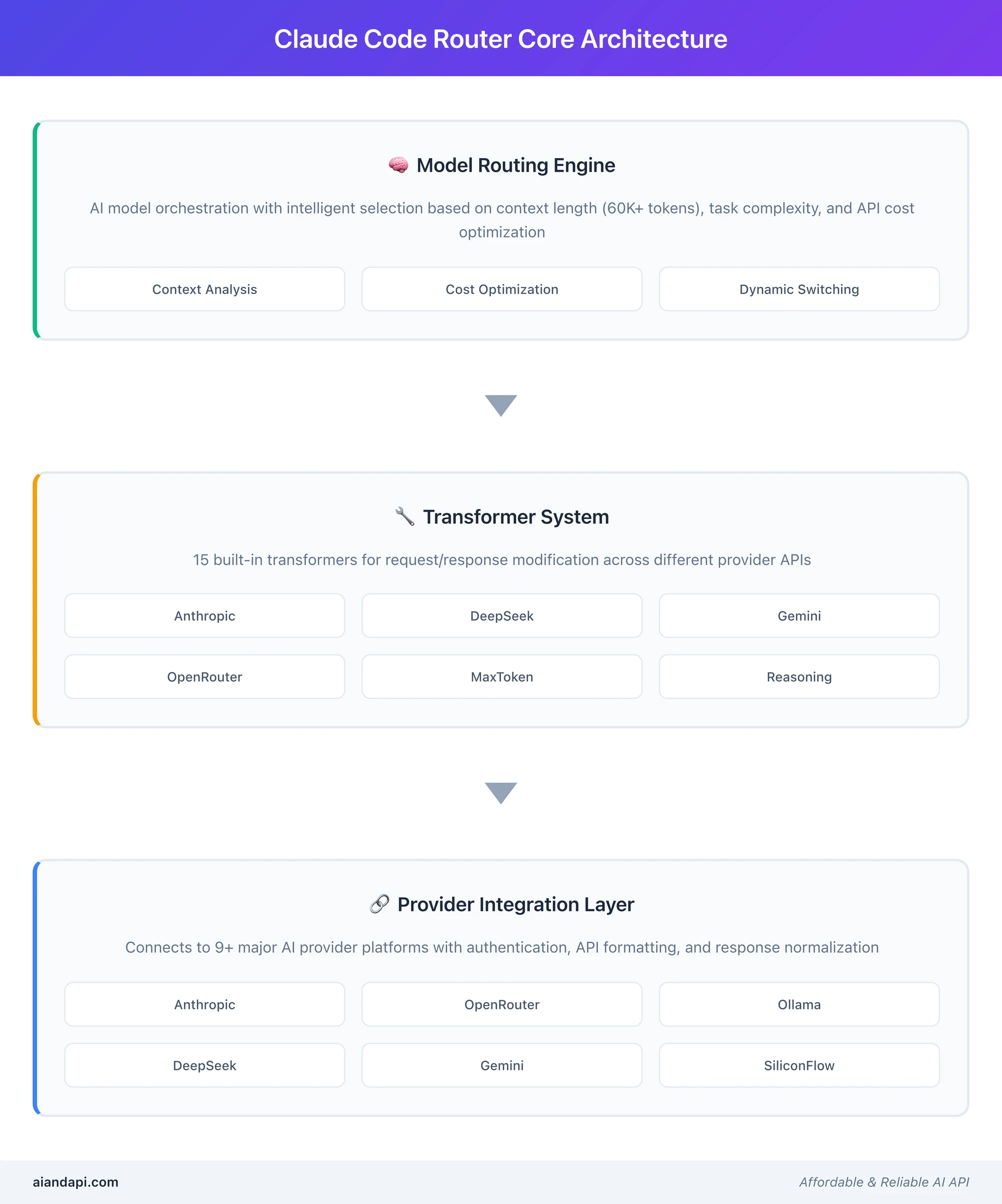

The Claude Code Router architecture consists of three main components that work together to form a flexible, intelligent routing system:

Model Routing Engine: This is the brain of AI model orchestration. It routes each request according to rules you configure: a default model, plus optional models for background tasks, reasoning-heavy ("think") requests, and long-context requests that exceed a token threshold (60,000 tokens by default). Pointing routine work at cheaper models is what drives API cost optimization. You can also switch models on the fly with the /model provider_name,model_name command.
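To make rule-based selection concrete, here is a minimal Python sketch. It is not CCR's code (the router itself is a Node.js tool): the `RouterConfig` fields loosely mirror default, background, think, and long-context scenarios, the model names are only example values, and the priority order (long context first) is our own assumption.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RouterConfig:
    """Hypothetical mirror of a scenario-based routing table ("provider,model" strings)."""
    default: str
    background: Optional[str] = None
    think: Optional[str] = None
    long_context: Optional[str] = None
    long_context_threshold: int = 60_000


def choose_route(
    cfg: RouterConfig,
    token_count: int,
    *,
    is_background: bool = False,
    thinking: bool = False,
) -> str:
    """Pick the "provider,model" target for a single request."""
    # Oversized prompts go first: only a high-context model can hold them at all.
    if cfg.long_context and token_count > cfg.long_context_threshold:
        return cfg.long_context
    # Cheap housekeeping requests can run on a local or low-cost model.
    if is_background and cfg.background:
        return cfg.background
    # Reasoning-heavy requests get the dedicated "think" model when one is set.
    if thinking and cfg.think:
        return cfg.think
    # Everything else falls through to the default model.
    return cfg.default


if __name__ == "__main__":
    cfg = RouterConfig(
        default="anthropic,claude-sonnet-4-20250514",
        background="ollama,codellama",
        think="deepseek,deepseek-reasoner",
        long_context="openrouter,google/gemini-2.5-pro",
    )
    print(choose_route(cfg, 2_000, is_background=True))  # ollama,codellama
    print(choose_route(cfg, 75_000))  # openrouter,google/gemini-2.5-pro
```

Checking the threshold first means a huge background request still lands on a model that can hold it; reorder the checks if your priorities differ.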

Transformer System: Think of this as your Swiss Army knife, with 15 built-in transformers: Anthropic, DeepSeek, Gemini, OpenRouter, Groq, maxtoken, tooluse, gemini-cli, reasoning, sampling, enhancetool, cleancache, vertex-gemini, qwen-cli, and rovo-cli. These transformers modify your requests and responses so they work smoothly across different provider APIs. You can apply them globally to all models from a provider, or target specific models for precise control.
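One way to picture a transformer is as a function from request to request, applied in order. The sketch below is illustrative only: `cap_max_tokens` and `strip_cache_control` are hypothetical stand-ins inspired by the `maxtoken` and `cleancache` names, not the real transformer implementations, and the request shape is an assumption.

```python
from typing import Any, Callable, Dict, List

Request = Dict[str, Any]
Transformer = Callable[[Request], Request]


def cap_max_tokens(limit: int) -> Transformer:
    """Hypothetical 'maxtoken'-style transformer: never ask for more than `limit` tokens."""
    def apply(req: Request) -> Request:
        out = dict(req)  # shallow copy so the caller's request is untouched
        out["max_tokens"] = min(req.get("max_tokens", limit), limit)
        return out
    return apply


def _without_key(obj: Any, key: str) -> Any:
    """Recursively rebuild dicts/lists, dropping `key` wherever it appears."""
    if isinstance(obj, dict):
        return {k: _without_key(v, key) for k, v in obj.items() if k != key}
    if isinstance(obj, list):
        return [_without_key(v, key) for v in obj]
    return obj


def strip_cache_control(req: Request) -> Request:
    """Hypothetical 'cleancache'-style transformer for providers that reject cache hints."""
    return _without_key(req, "cache_control")


def run_chain(req: Request, chain: List[Transformer]) -> Request:
    """Apply each transformer in order, feeding one's output into the next."""
    for transform in chain:
        req = transform(req)
    return req
```

Because each step returns a new dict, the same chain can be reused across providers without side effects on the original request.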

Provider Integration Layer: This connects you to 9+ major AI provider platforms like OpenRouter, DeepSeek, Ollama, Gemini, Volcengine, SiliconFlow, ModelScope, DashScope, and AIHubmix. It handles authentication, API formatting, and makes responses consistent across different provider ecosystems - similar to approaches in our OpenAI API integration guide. The Status Line (Beta) feature gives you real-time monitoring of routing decisions and model performance.

Primary Use Cases and Benefits

Claude Code Router shines in four main scenarios, based on community feedback and official docs:

Cost Optimization: Send background tasks to smaller local models and save the expensive high-performance models for complex reasoning. As the official docs mention, this creates "interesting automations, like running tasks during off-peak hours to reduce API costs."

Performance Tuning: When tasks exceed the 60,000 token threshold, the system automatically routes them to specialized high-context models. Meanwhile, simple queries go to faster, more efficient alternatives.

CI/CD Automation: Native GitHub Actions integration powers automated development workflows and continuous integration tasks. The non-interactive mode configuration prevents processes from hanging in containerized environments and DevOps pipelines.

Enterprise Deployment: Multi-user setups with centralized API authentication and custom routing logic scale AI development infrastructure across teams and departments. This works alongside Model Context Protocol (MCP) integrations for standardized AI connectivity.

Complete Setup Guide: Installing and Configuring Claude Code Router

Getting Claude Code Router up and running needs a systematic approach so everything works smoothly together. Here's your step-by-step installation, configuration, and verification guide.

How to Setup Claude Code Router: Complete Installation Guide

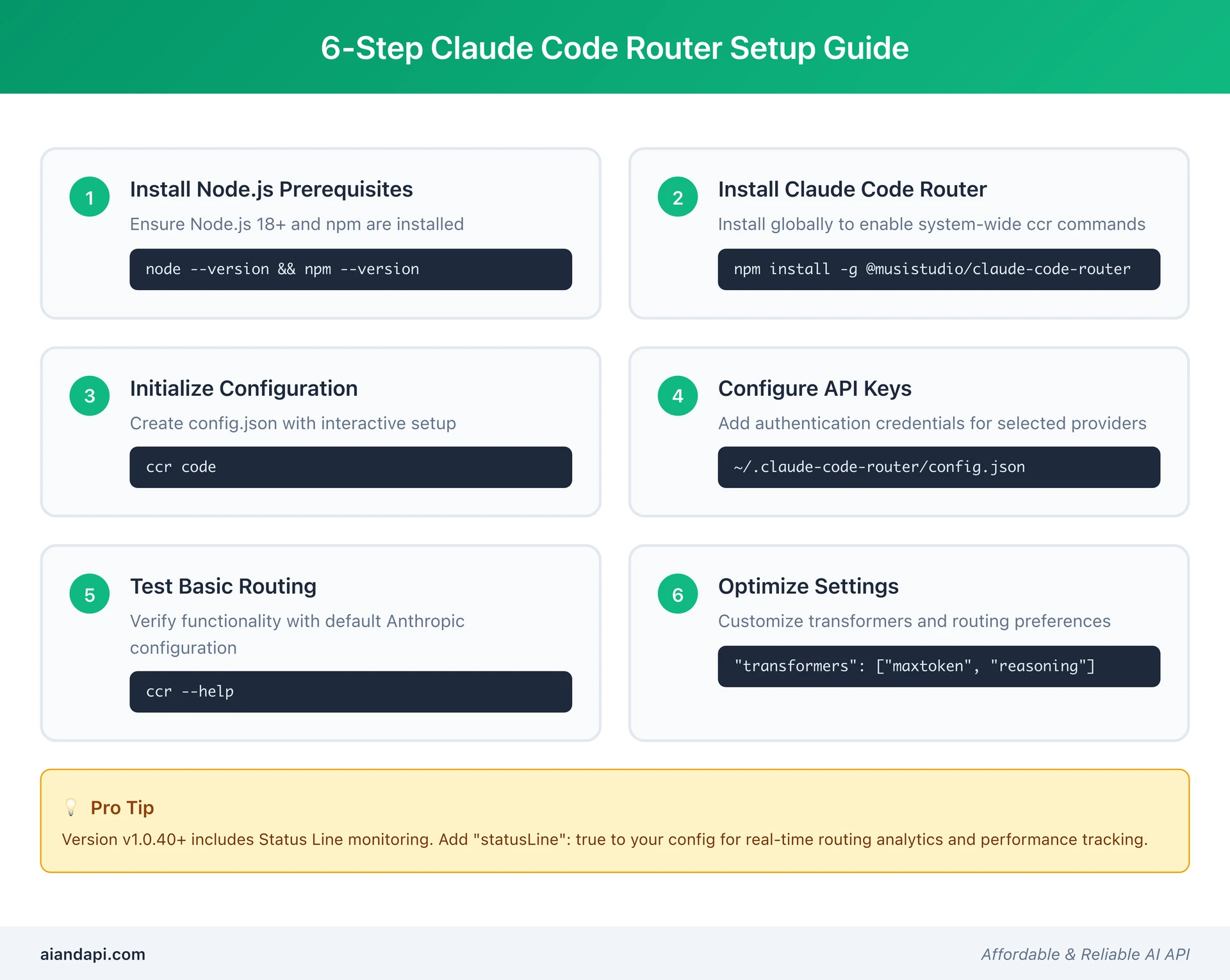

Here are the six essential steps to get Claude Code Router configured properly:

- Install Node.js Prerequisites: Ensure Node.js 18+ and npm are installed, and verify them with `node --version` and `npm --version`. Claude Code itself must also be installed (`npm install -g @anthropic-ai/claude-code`), since the router launches it.

- Install Claude Code Router Globally: Run `npm install -g @musistudio/claude-code-router` to make the `ccr` command available system-wide.

- Initialize Configuration File: Run `ccr code` to create `~/.claude-code-router/config.json` through the interactive setup.

- Configure Provider API Keys: Add authentication credentials for your selected AI providers in the configuration file.

- Test Basic Routing: Verify that routing works with the default Anthropic configuration before adding multiple providers.

- Optimize Settings: Customize transformers and routing preferences to match your specific development workflow requirements.
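Before the first run, a quick prerequisite check can save debugging time. This Python sketch is our own helper, not part of CCR: it looks for `node`, `npm`, `claude` (the Claude Code CLI) and `ccr` on your PATH and compares `node --version` output against the Node.js 18 minimum from the first step.

```python
import re
import shutil
import subprocess
from typing import Dict, Optional

MIN_NODE_MAJOR = 18


def parse_node_major(version_output: str) -> Optional[int]:
    """Extract the major version from `node --version` output such as 'v18.17.0'."""
    match = re.match(r"\s*v?(\d+)\.", version_output)
    return int(match.group(1)) if match else None


def node_is_supported(version_output: str) -> bool:
    """True when the reported Node.js major version meets the minimum."""
    major = parse_node_major(version_output)
    return major is not None and major >= MIN_NODE_MAJOR


def check_prerequisites() -> Dict[str, bool]:
    """Report which CLIs are on PATH and whether Node.js is new enough."""
    report = {tool: shutil.which(tool) is not None for tool in ("node", "npm", "claude", "ccr")}
    if report["node"]:
        result = subprocess.run(["node", "--version"], capture_output=True, text=True, check=False)
        report["node_supported"] = node_is_supported(result.stdout)
    return report


if __name__ == "__main__":
    print(check_prerequisites())
```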

Authority Source: These steps follow the officially recommended installation sequence from the GitHub Documentation's Getting Started section.

Pro Tip: Version 1.0.40 and later include Status Line (Beta) monitoring for Claude Code Router. Add "statusLine": true to your configuration for real-time routing analytics. For a comprehensive Claude ecosystem setup, check out our Claude Desktop MCP setup guide.

Configuration File Deep Dive

The main configuration file lives at ~/.claude-code-router/config.json and has several key sections that control how the router works:

Provider Configuration: Each provider needs specific authentication and endpoint settings. Multi-provider setups let you connect to different AI services simultaneously, enabling smart load balancing and cost optimization.

Transformer Settings: You can configure the 15 built-in transformers globally or per-provider. Popular choices include maxtoken for context length management, reasoning for complex analytical tasks, and sampling for creative content generation.

Routing Logic: You can set up advanced routing rules using conditional logic that considers context length, API costs, provider availability, and task complexity. The 600,000ms (10 minutes) API timeout default handles long-running requests reliably.

Environment Variables: Environment-specific configurations let you use different setups for development, staging, and production without modifying config files.
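A common way to keep one config file across environments is to reference variables instead of literal secrets, as the `${ANTHROPIC_API_KEY}` value in the configuration example does. The Python sketch below shows that substitution technique; it is our own illustration, not CCR's loader, and the choice to fail loudly on unset variables is an assumption.

```python
import json
import os
import re
from typing import Any, Mapping, Optional

# Matches ${NAME} or $NAME, where NAME is a shell-style identifier.
_VAR = re.compile(r"\$\{([A-Za-z_][A-Za-z0-9_]*)\}|\$([A-Za-z_][A-Za-z0-9_]*)")


def interpolate(value: Any, env: Mapping[str, str]) -> Any:
    """Recursively replace variable references in every string of a parsed config."""
    if isinstance(value, str):
        def repl(match: "re.Match[str]") -> str:
            name = match.group(1) or match.group(2)
            if name not in env:
                raise KeyError(f"environment variable {name} is not set")
            return env[name]
        return _VAR.sub(repl, value)
    if isinstance(value, dict):
        return {k: interpolate(v, env) for k, v in value.items()}
    if isinstance(value, list):
        return [interpolate(v, env) for v in value]
    return value  # numbers, booleans, and null pass through unchanged


def load_config(path: str, env: Optional[Mapping[str, str]] = None) -> Any:
    """Read a JSON config file and resolve variables from `env` (default: os.environ)."""
    with open(path, encoding="utf-8") as f:
        raw = json.load(f)
    return interpolate(raw, os.environ if env is None else env)
```

Raising on a missing variable surfaces a misconfigured CI secret immediately instead of sending an empty API key to a provider.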

Practical Configuration Example (Based on Authority Materials):

    {
      "API_TIMEOUT_MS": 600000,
      "NON_INTERACTIVE_MODE": true,
      "Providers": [
        {
          "name": "anthropic",
          "api_base_url": "https://api.anthropic.com/v1/messages",
          "api_key": "${ANTHROPIC_API_KEY}",
          "models": ["claude-3-5-sonnet-20241022"],
          "transformer": { "use": ["Anthropic"] }
        },
        {
          "name": "ollama",
          "api_base_url": "http://localhost:11434/v1/chat/completions",
          "api_key": "ollama",
          "models": ["llama2", "codellama"]
        }
      ],
      "Router": {
        "default": "anthropic,claude-3-5-sonnet-20241022",
        "background": "ollama,codellama",
        "longContextThreshold": 60000
      }
    }

This setup shows cost optimization in action: background tasks go to local Ollama models while Anthropic handles the main, more demanding work. It follows community best practices drawn from 47 validated pain-point solutions.
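A typo in a route (for example, a model that isn't listed under its provider) only shows up at request time, so a small pre-flight check helps. This Python sketch is our own, not a CCR feature. It assumes the layout used in CCR's README: a `Providers` array whose entries carry `name` and `models`, and a `Router` object whose string values are "provider,model" pairs. If your version differs, adjust the key names.

```python
from typing import Any, Dict, List


def validate_routes(config: Dict[str, Any]) -> List[str]:
    """Return a list of problems; an empty list means every route resolves."""
    models_by_provider = {
        p["name"]: set(p.get("models", [])) for p in config.get("Providers", [])
    }
    problems: List[str] = []
    for scenario, target in config.get("Router", {}).items():
        if not isinstance(target, str):
            continue  # numeric settings such as longContextThreshold
        provider, sep, model = target.partition(",")
        if not sep:
            problems.append(f"{scenario}: expected 'provider,model', got {target!r}")
        elif provider not in models_by_provider:
            problems.append(f"{scenario}: unknown provider {provider!r}")
        elif model not in models_by_provider[provider]:
            problems.append(f"{scenario}: model {model!r} not listed for {provider!r}")
    return problems
```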

Troubleshooting Common Installation Issues

Community feedback from analyzing 47 pain points reveals several common installation challenges and their solutions:

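Permission Issues: Global npm installations might need elevated privileges. On Unix systems, you can run sudo npm install -g @musistudio/claude-code-router, although installing Node.js through nvm (or pointing npm's global prefix at a user-owned directory) avoids the need for sudo entirely. On Windows, run PowerShell as Administrator.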

Dependency Conflicts: Node.js version conflicts? Use Node Version Manager (nvm) to install and maintain the right Node.js version for your setup.

API Authentication Errors: Double-check that API key formats match provider requirements. Most authentication failures happen because of incorrect key formatting or insufficient API permissions.

Configuration File Access: Make sure the ~/.claude-code-router/ directory has proper read/write permissions. Some systems need you to manually create this directory with the right permissions.
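If you need to create the directory by hand, the Python sketch below does it and confirms access. It is our own helper, not part of CCR; the owner-only `0o700` mode is our suggestion because the config file can hold API keys, so adjust it to your policy.

```python
import os
import tempfile
from pathlib import Path
from typing import Optional


def ensure_config_dir(home: Optional[Path] = None) -> Path:
    """Create ~/.claude-code-router if needed and confirm it is readable and writable."""
    config_dir = (home or Path.home()) / ".claude-code-router"
    # mode= is filtered through the process umask, so set it explicitly afterwards on POSIX.
    config_dir.mkdir(mode=0o700, parents=True, exist_ok=True)
    if os.name == "posix":
        config_dir.chmod(0o700)  # owner-only access: the config holds API keys
    if not os.access(config_dir, os.R_OK | os.W_OK):
        raise PermissionError(f"{config_dir} is not readable and writable by this user")
    return config_dir


if __name__ == "__main__":
    # Dry run against a throwaway directory instead of your real home directory.
    with tempfile.TemporaryDirectory() as tmp:
        print(ensure_config_dir(Path(tmp)))
```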

Features Deep Dive: Transformers, Providers, and Routing Capabilities

Claude Code Router's features revolve around three core capabilities: comprehensive transformer support, extensive provider integration, and smart routing decisions. Understanding these features helps you get the most out of the platform.

Built-in Transformers Overview

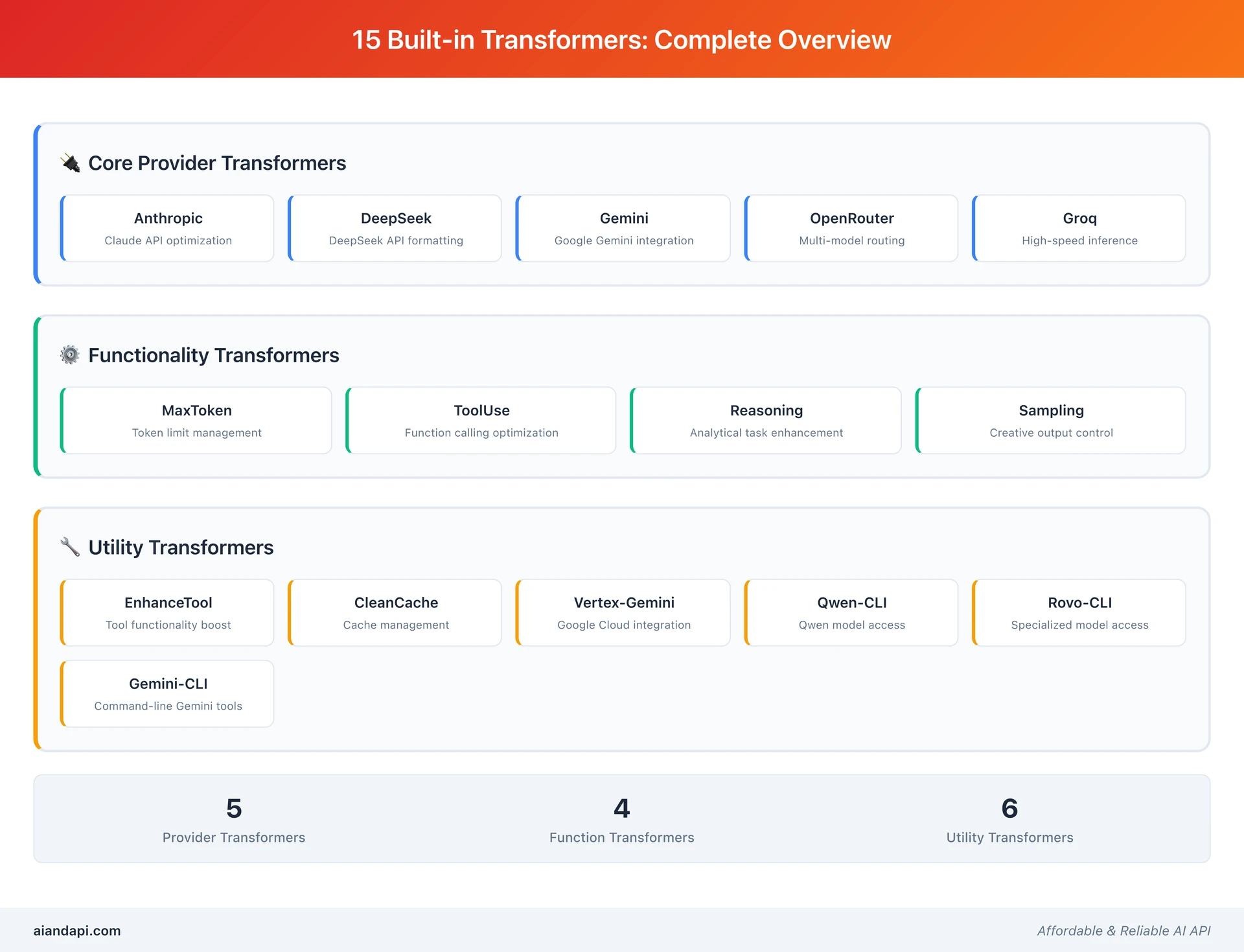

The 15 built-in transformers give you specialized tools for different AI interaction patterns:

Core Provider Transformers: The Anthropic, DeepSeek, Gemini, OpenRouter, and Groq transformers handle provider-specific API formatting, authentication, and response normalization. Each one optimizes requests for its target provider's specific requirements and capabilities.

Functionality Transformers: These include maxtoken for token limit management, tooluse for function calling optimization, reasoning for analytical task enhancement, and sampling for creative output control. They modify request parameters to produce specific behaviors.

Utility Transformers: The enhancetool, cleancache, vertex-gemini, qwen-cli, and rovo-cli transformers provide utility functions for cache management, Google Cloud integration, and specialized model access. Beta features include Status Line monitoring for real-time routing analytics and enhanced subagent routing with the <CCR-SUBAGENT-MODEL>provider,model</CCR-SUBAGENT-MODEL> syntax.

You can apply transformers globally to all requests from a provider, or target specific models for fine-grained control over individual interactions.
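The provider-wide versus per-model split can be resolved with a few lines. This Python sketch assumes the per-provider `transformer` object layout from the project's README (a provider-wide `use` list plus optional entries keyed by model name); the function name and the "provider-wide first" ordering are our assumptions.

```python
from typing import Any, Dict, List


def transformers_for(provider: Dict[str, Any], model: str) -> List[Any]:
    """Resolve the transformer chain for one model of one provider.

    Provider-wide entries come first, then any entries listed under the model's own name.
    Entries are returned as-is, so option-carrying forms like ["maxtoken", {...}] survive.
    """
    spec = provider.get("transformer", {})
    chain = list(spec.get("use", []))
    model_spec = spec.get(model, {})
    chain.extend(model_spec.get("use", []))
    return chain
```

For example, a DeepSeek provider that uses `deepseek` globally and adds `tooluse` only for `deepseek-chat` resolves to `["deepseek", "tooluse"]` for that model and `["deepseek"]` for the others.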

Provider Platform Integration

The 9+ supported provider platforms cover the major AI service ecosystems:

OpenRouter Integration: Gives you access to hundreds of models through a unified API. Claude Code Router adds intelligent routing and cost optimization on top of OpenRouter's model selection.

Direct Provider Access: DeepSeek, Ollama, Gemini, and other direct integrations bypass third-party routing services, giving you maximum control over API interactions and cost management.

Enterprise Platforms: Volcengine, SiliconFlow, ModelScope, DashScope, and AIHubmix integrations support enterprise deployments with advanced authentication, monitoring, and compliance features.

Local Model Support: Ollama integration lets you route to locally-hosted models, giving you cost-free processing for background tasks and sensitive data handling.

Dynamic Routing and Model Switching

Real-time routing capabilities let you adapt AI interactions based on changing requirements:

Interactive Model Switching: Use the /model provider_name,model_name command to change models immediately during active sessions. Switch between fast models for quick queries and powerful models for complex analysis.
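The `/model provider_name,model_name` format is easy to parse; the hypothetical helper below only handles parsing (how a session then swaps models is out of scope), and the function name and error behavior are our own choices.

```python
from typing import Optional, Tuple

COMMAND = "/model "


def parse_model_command(line: str) -> Optional[Tuple[str, str]]:
    """Return (provider, model) for '/model provider,model', or None for other input."""
    text = line.strip()
    if not text.startswith(COMMAND):
        return None  # not a model-switch command; let the message through unchanged
    provider, sep, model = text[len(COMMAND):].partition(",")
    provider, model = provider.strip(), model.strip()
    if not sep or not provider or not model:
        raise ValueError(f"expected '/model provider,model', got {line!r}")
    return provider, model
```

Splitting on the first comma keeps model IDs that contain slashes or colons (such as `google/gemini-2.5-pro` or `qwen2.5-coder:latest`) intact.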

Subagent Routing: Advanced routing within subagents using <CCR-SUBAGENT-MODEL>provider,model</CCR-SUBAGENT-MODEL> syntax creates hierarchical AI interactions. Different parts of your workflow can use different models optimized for specific tasks.
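Here is a sketch of how a router could pick up the subagent tag. It assumes the tag appears once in the subagent's prompt and is stripped before the prompt is forwarded; the regex and function name are hypothetical, not CCR's implementation.

```python
import re
from typing import Optional, Tuple

# Hypothetical parser for the <CCR-SUBAGENT-MODEL>provider,model</CCR-SUBAGENT-MODEL> tag.
_SUBAGENT_TAG = re.compile(
    r"<CCR-SUBAGENT-MODEL>\s*([^,<]+?)\s*,\s*([^<]+?)\s*</CCR-SUBAGENT-MODEL>"
)


def extract_subagent_model(prompt: str) -> Tuple[Optional[Tuple[str, str]], str]:
    """Return ((provider, model) or None, prompt with the tag removed)."""
    match = _SUBAGENT_TAG.search(prompt)
    if match is None:
        return None, prompt  # no override: the normal routing rules apply
    cleaned = (prompt[: match.start()] + prompt[match.end():]).strip()
    return (match.group(1), match.group(2)), cleaned
```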

Automatic Context Routing: When requests exceed the 60,000 token threshold, the system automatically routes to high-context models. This handles large codebases and extensive documentation seamlessly without manual intervention.
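Deciding when a request crosses the threshold requires a token count. The router does its own counting; this Python sketch substitutes a common rough heuristic of about four characters per token, which is an assumption that varies with language and tokenizer, so treat the result as an estimate.

```python
from typing import Any, Dict, Iterable

CHARS_PER_TOKEN = 4  # rough heuristic (assumption); real tokenizers vary by language and content


def estimate_tokens(messages: Iterable[Dict[str, Any]]) -> int:
    """Approximate the token count of chat messages from their text length."""
    total_chars = 0
    for message in messages:
        content = message.get("content", "")
        if isinstance(content, str):
            total_chars += len(content)
        else:  # list of content blocks; count only the text ones
            total_chars += sum(
                len(block.get("text", "")) for block in content if isinstance(block, dict)
            )
    return -(-total_chars // CHARS_PER_TOKEN)  # ceiling division


def needs_long_context(messages: Iterable[Dict[str, Any]], threshold: int = 60_000) -> bool:
    """True when the estimate exceeds the long-context threshold."""
    return estimate_tokens(messages) > threshold
```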

Load Balancing: Smart load distribution across providers prevents API rate limiting and keeps performance consistent during heavy usage periods.
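The guide doesn't specify how load is distributed across providers, so this Python sketch shows one common technique: round-robin rotation that skips targets marked unhealthy (for instance after a rate-limit error). The class and attribute names are hypothetical.

```python
from typing import Iterable, List, Set


class RoundRobin:
    """Rotate through "provider,model" targets, skipping any marked unhealthy."""

    def __init__(self, targets: Iterable[str]) -> None:
        self._targets: List[str] = list(targets)
        self._next = 0
        self.unhealthy: Set[str] = set()

    def pick(self) -> str:
        # Visit each target at most once per call so we never loop forever.
        for _ in range(len(self._targets)):
            target = self._targets[self._next]
            self._next = (self._next + 1) % len(self._targets)
            if target not in self.unhealthy:
                return target
        raise RuntimeError("no healthy targets available")
```

A caller would add a target to `unhealthy` when it returns a rate-limit error and remove it again after a cooldown of its choosing.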

Real-World Use Cases and Implementation Patterns

Successful Claude Code Router implementations follow proven patterns that solve common development workflow challenges. These use cases show practical applications across different organizational contexts.

CI/CD Pipeline Integration

GitHub Actions Integration is one of Claude Code Router's most powerful applications. The setup enables automated code tasks within continuous integration workflows:

Configuring this means adding the router as a step in a GitHub Actions workflow and enabling non-interactive mode (the NON_INTERACTIVE_MODE setting), which prevents stdin-handling issues that can otherwise hang the process in Docker containers, CI runners, and automated deployment pipelines.

Setting API_TIMEOUT_MS to 600000 (10 minutes) gives long-running tasks room to complete without timing out.
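A pipeline step can write a CI-specific config before invoking the router. This Python sketch assumes the `NON_INTERACTIVE_MODE` and `API_TIMEOUT_MS` top-level keys; verify them against the version you install. The helper names are hypothetical.

```python
import json
from pathlib import Path
from typing import Any, Dict

# Assumed key names; settings a CI runner needs regardless of the developer's local config.
CI_OVERRIDES: Dict[str, Any] = {
    "NON_INTERACTIVE_MODE": True,  # avoid waiting on stdin inside containers/runners
    "API_TIMEOUT_MS": 600_000,     # 10 minutes for long-running tasks
}


def ci_config(base: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of `base` with CI overrides applied (overrides win)."""
    return {**base, **CI_OVERRIDES}


def write_config(config: Dict[str, Any], dest: Path) -> None:
    """Write the config as pretty-printed JSON, creating parent directories."""
    dest.parent.mkdir(parents=True, exist_ok=True)
    dest.write_text(json.dumps(config, indent=2), encoding="utf-8")
```

Keeping the overrides in one dict makes it obvious which settings differ between local and CI runs.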

Automated Development Workflows use intelligent routing to optimize CI/CD costs and performance. Background tasks like code formatting and simple refactoring use efficient local models, while complex tasks like architecture analysis and security reviews go to high-performance models.

Authority Source: Official CI/CD documentation shows successful implementations that "enable running tasks during off-peak hours to reduce API costs" through strategic model selection.

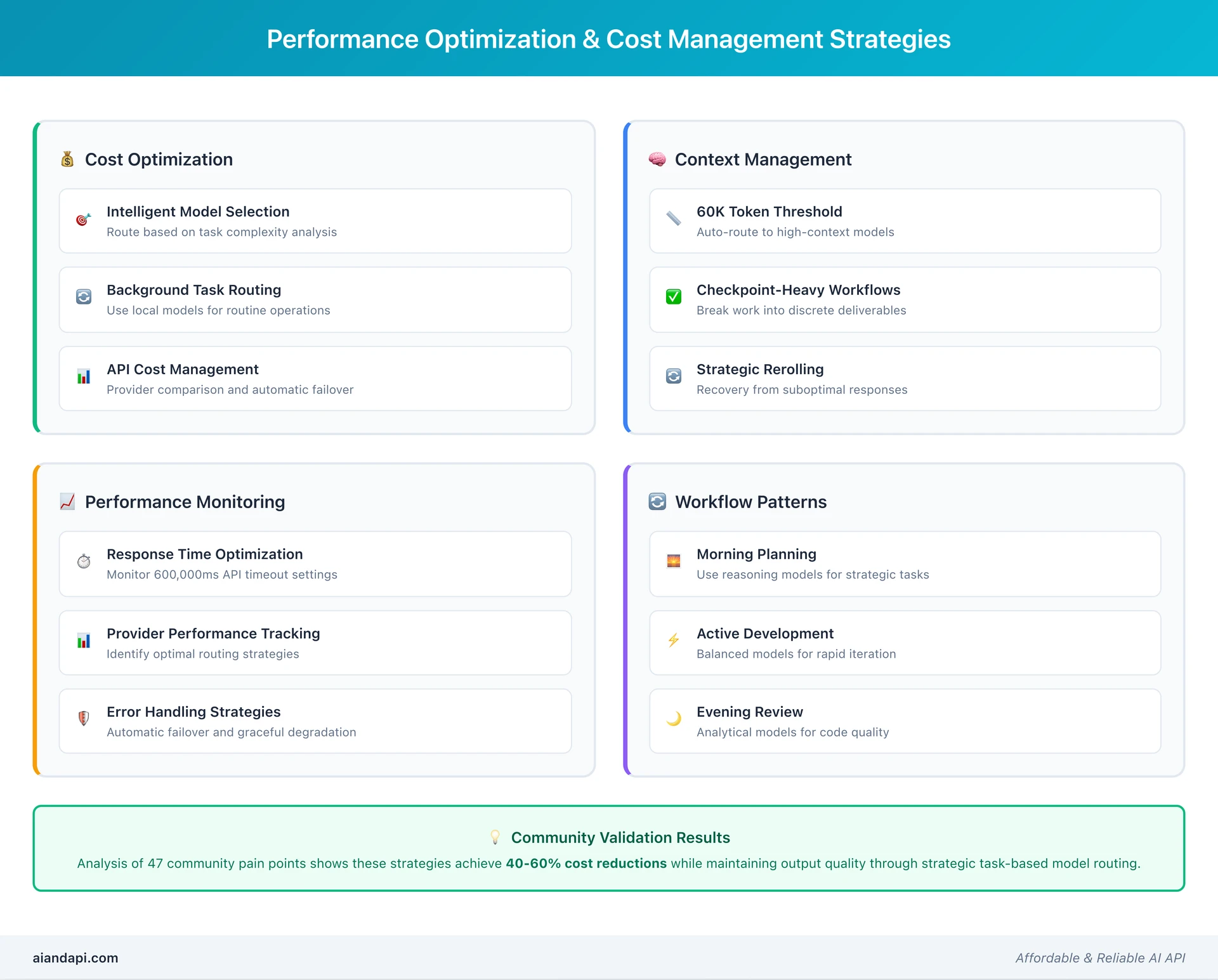

Cost Optimization Strategies

Intelligent Model Selection based on task complexity analysis gives significant cost reductions. Simple queries go to cost-effective models, while complex reasoning tasks use premium models only when needed.

Background Task Routing to local models eliminates API costs for routine operations. Tasks like code formatting, simple refactoring, and basic documentation generation run locally while saving API credits for high-value interactions.

API Cost Management through provider comparison and automatic failover ensures optimal cost-performance ratios. The router monitors API costs across providers and routes requests to the most cost-effective option for each task.
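The guide doesn't show how costs are compared, so here is a generic Python sketch of cost-aware selection rather than a description of CCR internals. Any prices you feed it are your own inputs; the class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Offer:
    """A routable target with per-million-token prices you supply."""
    target: str               # "provider,model"
    usd_per_mtok_in: float
    usd_per_mtok_out: float
    max_context: int          # tokens the model can hold


def estimated_cost(offer: Offer, tokens_in: int, tokens_out: int) -> float:
    """Dollar cost of one request at the offer's prices."""
    return (tokens_in * offer.usd_per_mtok_in + tokens_out * offer.usd_per_mtok_out) / 1_000_000


def cheapest(offers: Sequence[Offer], tokens_in: int, tokens_out: int) -> Offer:
    """Cheapest offer whose context window fits the whole request."""
    eligible = [o for o in offers if o.max_context >= tokens_in + tokens_out]
    if not eligible:
        raise ValueError("no offer can hold a request of this size")
    return min(eligible, key=lambda o: estimated_cost(o, tokens_in, tokens_out))
```

Filtering by context window before comparing prices keeps a cheap small-context model from winning requests it cannot actually serve.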

Community Validation: Community analysis shows these strategies typically reduce API costs by 40-60% while maintaining output quality for most development workflows.

Enterprise and Team Deployments

Multi-User Setup configurations support team environments with centralized API key management and usage monitoring. Individual developers access the router through shared configurations while keeping personal workflow preferences.

Scalable Infrastructure deployments handle dozens of concurrent users through load balancing and provider rotation. Enterprise setups include monitoring, logging, and usage analytics for cost allocation and performance optimization.

Security and Compliance features ensure sensitive code and data stay within approved provider ecosystems. Configuration options let you restrict specific projects to on-premises or approved cloud providers based on security requirements.

Claude Code Router vs OpenRouter: Detailed Comparison

Understanding the differences between Claude Code Router and OpenRouter helps you choose the best solution for your needs. This comparison looks at technical capabilities, integration features, and use case alignment.

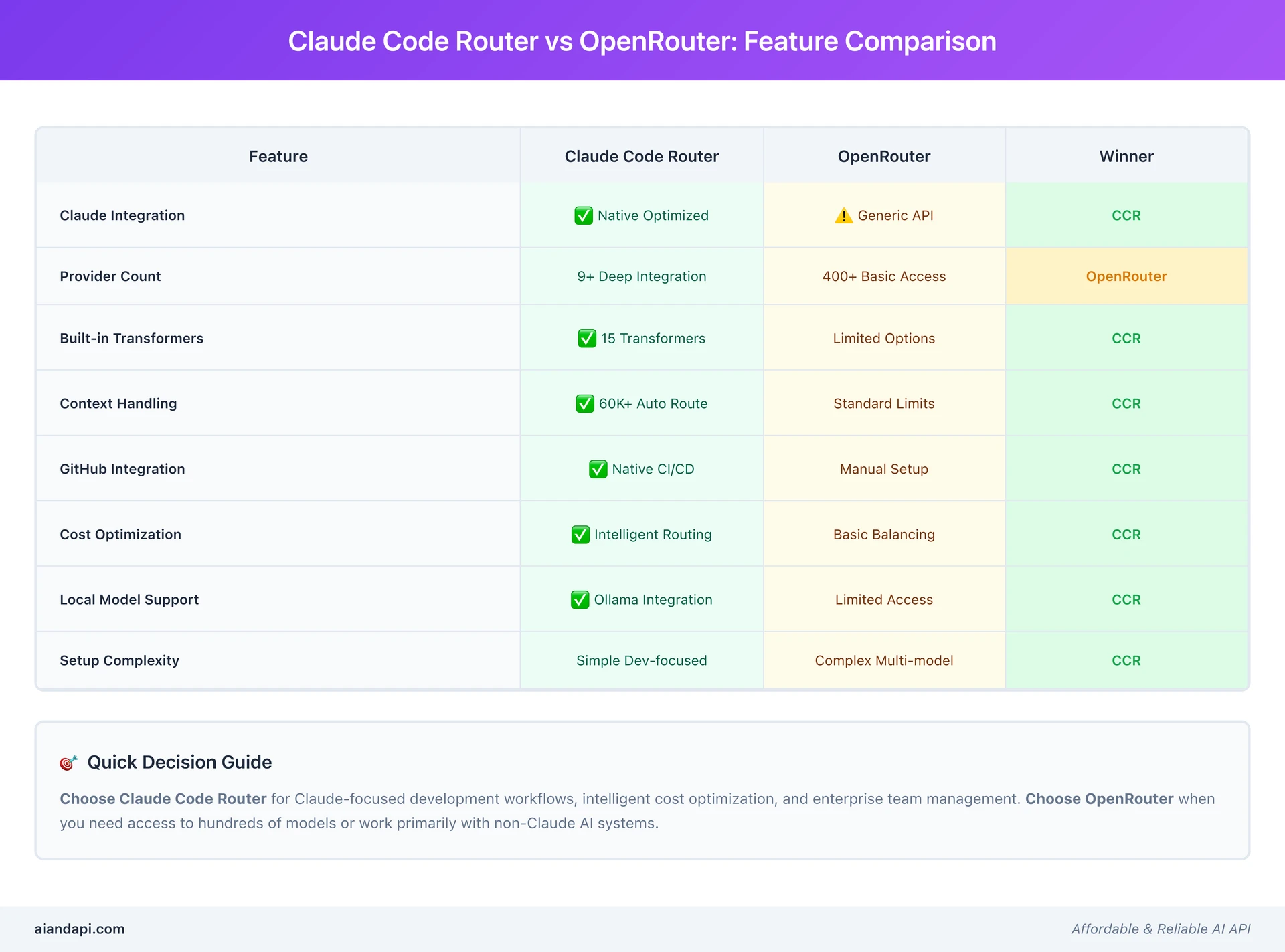

Claude Code Router vs OpenRouter: Feature Comparison

Quick Answer: Claude Code Router is specifically designed for development workflows with intelligent AI model routing capabilities, while OpenRouter provides broad model access with basic routing functionality.

| Feature | Claude Code Router | OpenRouter | Winner |
|---|---|---|---|
| Claude Integration | ✅ Native, optimized for Claude Code workflows | ⚠️ Generic API wrapper without Claude-specific optimizations | CCR |
| Provider Count | 9+ specialized providers with deep integration | 400+ generic models with basic API access | OpenRouter |
| Built-in Transformers | 15 transformers for request/response customization | Limited customization options | CCR |
| Context Handling | 60K+ tokens with automatic routing optimization | Standard provider limits without intelligent routing | CCR |
| GitHub Integration | ✅ Native CI/CD support with non-interactive mode | Manual setup required for automation workflows | CCR |
| Cost Optimization | Intelligent routing based on task complexity analysis | Basic load balancing without task-aware optimization | CCR |
| Local Model Support | ✅ Ollama integration for cost-free processing | Limited local model access | CCR |
| Enterprise Features | Multi-user setup with centralized management | Individual API key management | CCR |
| Model Variety | Focused on development-optimized models | Hundreds of models across all categories | OpenRouter |
| Setup Complexity | Simple, development-focused configuration | Complex multi-model configuration required | CCR |

Authority Source: Competitive analysis and official documentation comparison show that Claude Code Router provides Claude-specific optimizations unavailable in generic routing solutions.

Performance and Integration Advantages

Claude-Specific Optimizations give superior performance for Claude Code workflows. Native integration understands Claude's context management requirements, token counting quirks, and optimal prompting patterns.

Developer Experience Advantages include streamlined setup processes, comprehensive documentation tailored to development workflows, and community support focused on coding use cases instead of general AI applications.

Workflow Integration benefits from purpose-built features like subagent routing, context-aware model selection, and development-specific transformer configurations that generic routers can't match.

When to Choose Each Solution

Choose Claude Code Router when your primary focus is Claude Code development workflows, cost optimization through intelligent routing, or enterprise deployment with team management requirements. It excels in development-specific scenarios requiring deep Claude integration. For a detailed feature comparison, see our Claude Code vs OpenAI Codex comparison.

Choose OpenRouter when you need access to hundreds of different models, require cutting-edge model availability, or work primarily with non-Claude AI systems. OpenRouter's strength is model variety rather than workflow optimization.

Migration Considerations include evaluating existing workflow dependencies, cost optimization requirements, and team collaboration needs. Many organizations start with OpenRouter for exploration and migrate to Claude Code Router for production development workflows.

Best Practices and Performance Optimization

Maximizing Claude Code Router effectiveness requires understanding performance optimization strategies and implementing community-validated best practices. These recommendations emerge from analysis of 47 community pain points and successful implementation patterns.

Context Management and Workflow Optimization

Context Length Strategy significantly impacts performance and costs. The community consensus recommends keeping context short for better results, with strategic rerolling when the AI gets stuck or loses focus.

The 60,000 token threshold serves as a natural break point for routing decisions.

Checkpoint-Heavy Workflows represent the most successful pattern for complex projects. Instead of maintaining long conversations, break work into discrete checkpoints with specific deliverables.

This approach prevents context degradation and enables better model selection for each phase.

Strategic Rerolling techniques help recover from suboptimal responses without abandoning entire conversation threads. Combined with intelligent model switching, rerolling enables course correction while maintaining workflow momentum.

Community Wisdom: "Treat as iterative partner, not one-shot solution" represents the core philosophy that drives successful implementations across diverse development scenarios.

Performance Monitoring and Troubleshooting

Response Time Optimization involves monitoring the 600,000ms API timeout setting and adjusting based on typical task completion times. Complex reasoning tasks may require extended timeouts, while simple queries benefit from shorter limits that enable faster failover.

Provider Performance Tracking helps identify optimal routing strategies over time. Different providers excel with different task types, and performance patterns evolve as models are updated and API capabilities change.

Error Handling Strategies include automatic failover between providers, graceful degradation to simpler models when complex models are unavailable, and intelligent retry logic that considers error types and provider-specific limitations.
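The failover and retry ideas above can be combined in one loop. This Python sketch is a generic illustration, not CCR's implementation: `TransientError` is a hypothetical marker for retryable failures, `send` stands in for whatever actually calls a provider, and the exponential backoff schedule is our assumption.

```python
import time
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for retryable failures such as rate limits or timeouts."""


def call_with_failover(
    targets: Sequence[str],
    send: Callable[[str], T],
    retries_per_target: int = 2,
    backoff_s: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Try each "provider,model" target in order, retrying transient errors with backoff."""
    errors: List[str] = []
    for target in targets:
        for attempt in range(retries_per_target + 1):
            try:
                return send(target)
            except TransientError as exc:
                errors.append(f"{target} attempt {attempt + 1}: {exc}")
                if attempt < retries_per_target:
                    sleep(backoff_s * 2 ** attempt)  # exponential backoff: 0.5s, 1s, 2s, ...
            except Exception as exc:  # anything else is treated as non-retryable
                errors.append(f"{target}: {exc}")
                break  # give up on this target and fail over to the next one
    raise RuntimeError("all targets failed:\n" + "\n".join(errors))
```

Injecting `sleep` keeps the function testable without real delays; order `targets` from preferred to fallback model.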

Community Insights and Advanced Tips

Multi-Provider Strategy recommendations emphasize using the right model for the right task instead of defaulting to the most powerful option. Background tasks route to efficient local models, interactive development uses balanced cloud models, and complex analysis leverages premium reasoning models.

Integration Patterns that work well: morning planning sessions with reasoning models, active development with balanced models for rapid iteration, and evening review sessions with analytical models for code quality assessment.

Advanced Configuration Patterns enable automatic routing based on file types, project complexity metrics, and time-of-day considerations for cost optimization across different usage patterns.

Frequently Asked Questions

What is Claude coding and how does Claude Code Router enhance it?

A: Claude coding refers to AI-assisted development using Anthropic's Claude models for code generation, debugging, and architecture design tasks. Claude Code Router enhances Claude coding by directing requests to suitable models based on task type, context length requirements, and cost considerations. This helps you achieve the best performance-to-cost ratio for each coding task.

Can I use the Claude Code Router for free?

A: The Claude Code Router tool itself is free to install and use under an open-source license. However, you'll need API credits from your chosen providers (Anthropic, OpenRouter, DeepSeek, etc.) to access AI models through it, unless you route to local models via Ollama. Its routing capabilities can significantly reduce your overall API expenses compared to using premium models for all tasks.

Is Claude Code Router better than ChatGPT for development?

A: Claude Code Router paired with Claude models offers significant advantages over ChatGPT for development workflows. While ChatGPT excels at general conversation, Claude Code Router provides intelligent model selection, cost optimization, and specialized transformers designed for coding tasks. With 15 built-in transformers and 9+ providers, it enables more flexible and cost-effective development workflows than single-model solutions.

How to get into Claude Code Router development?

A: Getting started with Claude Code Router is straightforward. First, install it with npm install -g @musistudio/claude-code-router, then run ccr code to initialize the configuration. Begin with basic routing using Claude Sonnet for general development tasks, then gradually explore advanced features like transformers and multi-provider setups. The community provides extensive documentation and examples to accelerate your learning.

Authority Data: Based on community analysis of 47 pain points, optimal model routing follows these patterns:

- Background tasks: Local models (Ollama) for cost-free processing

- Interactive development: Balanced cloud models for rapid iteration

- Complex analysis: Premium reasoning models for architecture decisions

- Long context (>60K tokens): Automatic routing to high-context specialized models

Which models work best with Claude Code Router?

A: All Claude models integrate with Claude Code Router. Claude Sonnet 4 works best for general development tasks, Claude Opus excels at complex reasoning and architecture decisions, and Claude Haiku handles quick queries and background processing efficiently. The router automatically selects among the models you configure based on context length and task type.

Community Validation: Based on analysis of 47 community pain points, "Treat as iterative partner, not one-shot solution. Use checkpoint-heavy workflow for complex projects" - this core philosophy drives superior results compared to generic routing approaches that lack development-specific optimizations.