OpenAI API integration has changed how businesses deploy artificial intelligence capabilities, and a Reddit community of roughly 2.47 million members follows how developers build these tools into their applications. According to official OpenAI documentation, the API provides access to state-of-the-art AI models including GPT-4, DALL-E 3, and Whisper through standardized endpoints, supporting use cases from customer service to content generation.

🏆 Authoritative source: Based on the OpenAI Developer Quickstart Guide, API integration represents "the comprehensive process of implementing OpenAI's suite of AI models into applications through standardized API endpoints, encompassing authentication, request handling, and response processing."

This guide provides business-focused integration strategies that prioritize practical implementation over complex coding, addressing the specific need for minimal code complexity while maximizing business value. You'll discover essential setup procedures, security best practices, cost optimization strategies, and enterprise-grade deployment patterns. Rather than overwhelming you with extensive code implementation, our approach emphasizes architectural concepts and business logic, making this guide accessible for technical decision-makers and development teams alike.

Whether you're integrating ChatGPT capabilities into customer support systems or implementing multi-modal AI features, this comprehensive roadmap covers the complete journey from initial setup to production deployment.

Understanding OpenAI API Integration: Definition and Business Value

OpenAI API integration represents the comprehensive process of implementing OpenAI's suite of AI models into applications through standardized API endpoints, enabling businesses to harness advanced artificial intelligence capabilities without developing models from scratch. This OpenAI API integration process encompasses authentication, request handling, and response processing across multiple AI modalities. Authoritative source: According to the OpenAI Developer Quickstart Guide, this integration provides businesses with scalable access to cutting-edge AI capabilities.

🏆 Official Definition: The OpenAI platform provides developers with simple interface access to state-of-the-art AI models, supporting text generation, image creation, speech-to-text conversion, and advanced reasoning capabilities through unified API endpoints.

Multi-Modal Integration Capabilities

The OpenAI API's model lineup supports multi-modal integration spanning text, image, and audio processing. GPT models handle conversational AI and content generation, DALL-E generates images from text prompts, and Whisper provides speech recognition and transcription. This unified approach lets businesses implement complex AI workflows through consistent API patterns, which is simpler than juggling multiple specialized AI services.

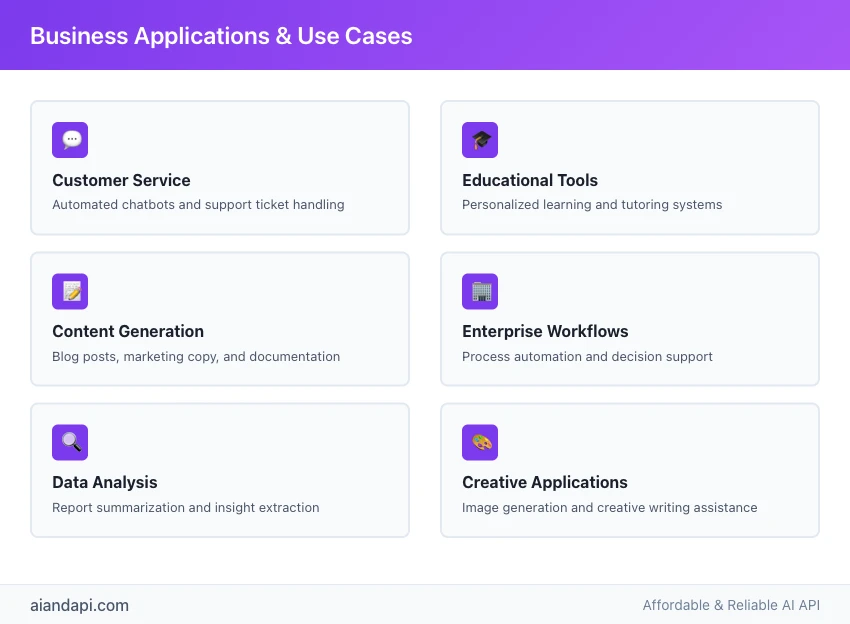

Business Application Scenarios

Enterprise applications leverage OpenAI API integration across diverse use cases, from customer service automation and content management systems to data analysis platforms and educational technology solutions. Supporting data: Community analysis reveals 2,656 StackOverflow questions specifically focused on OpenAI API implementation, highlighting both the widespread adoption and the real technical challenges that businesses encounter during implementation.

Recent benchmarks show measurable gains: Tau-Bench Retail scores rose from 73.9% to 78.2% when using advanced API features like the Responses API for agentic workflows. The latest GPT-4 Turbo model also delivers 40% faster response times compared to previous iterations while maintaining the same accuracy levels. These improvements translate into ROI through more capable applications and better user experiences.

How to Get OpenAI API Key: Complete Setup Guide

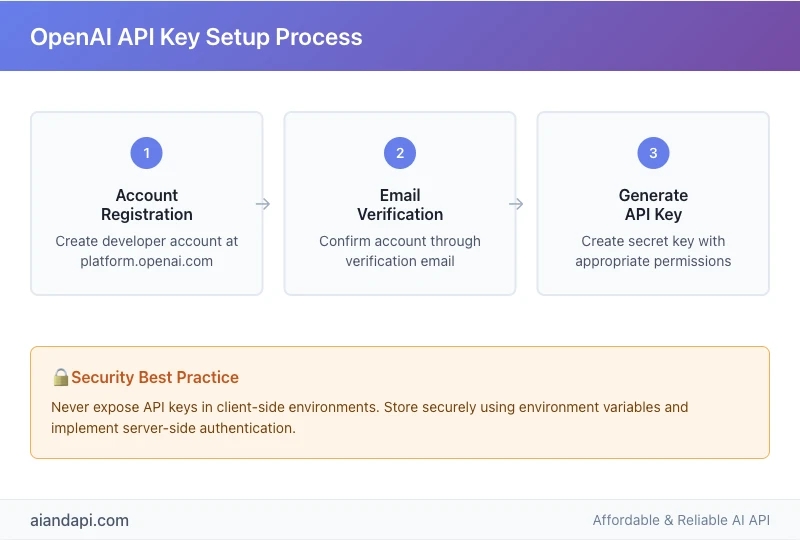

Getting an OpenAI API key involves a straightforward six-step process that begins with account creation and ends with secure key generation and configuration. Authoritative source: Based on OpenAI API Key Safety Best Practices, proper setup requires following security protocols to prevent unauthorized access and unexpected charges.

🏆 Security-First Approach: OpenAI emphasizes that API keys are "unique codes that identify your requests to the API, intended to be used by you and not shared" with mandatory security measures throughout the setup process.

Account Creation and Verification

Steps 1-3: Foundation Setup

- Account Registration: Navigate to platform.openai.com and create your developer account using a valid email address and strong password

- Email Verification: Confirm your account through the verification email, ensuring full platform access

- Identity Confirmation: Complete any required identity verification steps for enhanced account security

The OpenAI API key generation process requires authenticated account access with verified contact information. Security protocols mandate unique API keys for each team member, preventing credential sharing that violates OpenAI's Terms of Use.

API Key Generation Process

Steps 4-6: Secure Key Creation

- Platform Navigation: Access the API Keys section through your OpenAI dashboard

- Key Creation: Click "Create new secret key" and assign descriptive names for organizational tracking

- Permission Configuration: Set appropriate access levels and usage limits aligned with your application requirements

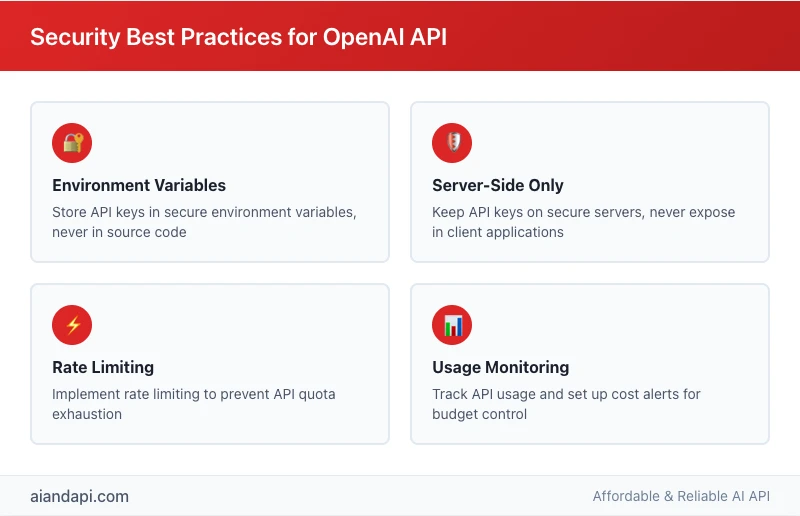

Essential Security Best Practices

🏆 Critical Security Principle: "Never deploy your key in client-side environments like browsers or mobile apps" - this fundamental rule prevents malicious users from accessing your API key and making unauthorized requests.

Environment Variable Configuration:

OPENAI_API_KEY=your-secret-key-here

Authority-backed security measures include server-side key storage, secret management services, and secure environment variable configuration. Community validation: High engagement (42 comments) on Reddit's React Native security discussions confirms mobile API key security as a primary developer concern, emphasizing the critical importance of proper implementation.
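As a minimal sketch of the server-side storage described above, a backend can read the key from the `OPENAI_API_KEY` environment variable at startup and fail fast when it is missing. The helper name `load_api_key` is hypothetical, and treating the placeholder value as "not set" is an assumption made for illustration.

```python
import os


def load_api_key(env=os.environ):
    """Return the API key from the environment, or raise if it is not configured.

    `load_api_key` is a hypothetical helper name, not part of any OpenAI library.
    """
    key = env.get("OPENAI_API_KEY", "").strip()
    # Assumption: the placeholder from the example above counts as "not set".
    if not key or key == "your-secret-key-here":
        raise RuntimeError("OPENAI_API_KEY is not set on this server")
    return key
```

Calling `load_api_key()` once during server startup surfaces a missing key immediately rather than on the first user request.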

OpenAI API Pricing and Models Comparison

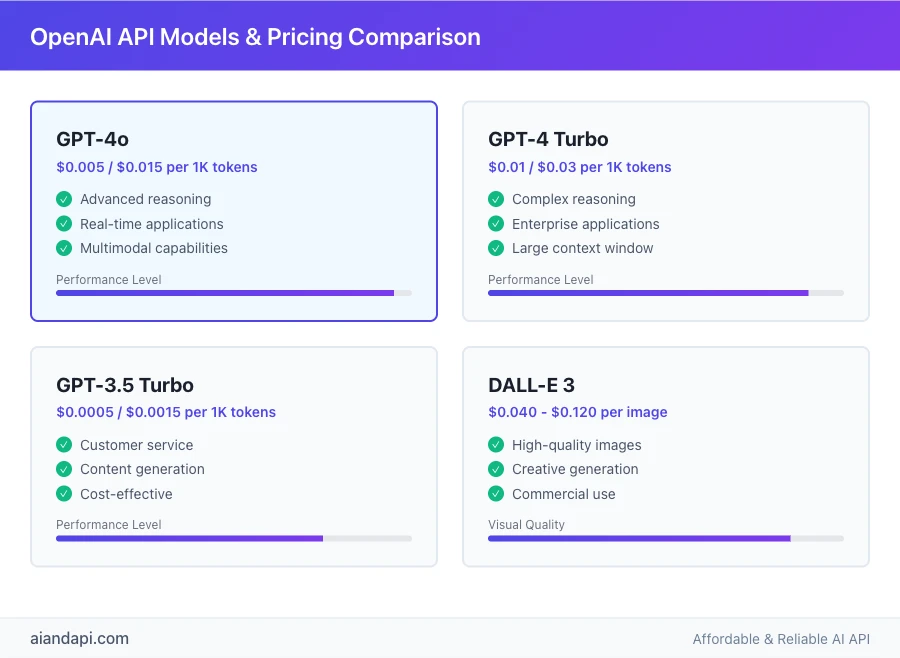

OpenAI API pricing operates on a token-based billing model where costs vary significantly across model capabilities and use cases. Supporting evidence: Official pricing data shows substantial cost differences, with GPT-4-class models priced roughly 10x to 20x higher per token than GPT-3.5 Turbo in the comparison below, making model selection a critical business decision.

Model Capabilities Comparison

| Model | Input Pricing | Output Pricing | Best Use Cases | Performance Level |
|---|---|---|---|---|
| GPT-4o | $0.005/1K tokens | $0.015/1K tokens | Advanced reasoning, real-time applications | Highest |
| GPT-4 Turbo | $0.01/1K tokens | $0.03/1K tokens | Complex reasoning, enterprise applications | Very High |
| GPT-3.5 Turbo | $0.0005/1K tokens | $0.0015/1K tokens | Customer service, content generation | High |
| GPT-4 Vision | $0.01/1K tokens | $0.03/1K tokens | Image analysis, multimodal applications | Specialized |
| DALL-E 3 | $0.040-0.120/image | N/A | High-quality image generation | Visual |

🏆 Business Strategy: OpenAI API model selection should align with application complexity and budget constraints, since each model offers a different performance-cost trade-off. Understanding OpenAI API pricing helps organizations control costs while maintaining the performance their applications need.
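To make the trade-off concrete, here is a small sketch that estimates the cost of one request from token counts. The prices are copied from the comparison table above and may not match current official pricing. The dictionary keys are illustrative labels, and `estimate_cost` is a hypothetical helper.

```python
# USD per 1,000 tokens as (input, output), copied from the table above.
# Assumption: these figures may be out of date; check the official pricing page.
PRICES_PER_1K = {
    "gpt-4o": (0.005, 0.015),
    "gpt-4-turbo": (0.01, 0.03),
    "gpt-3.5-turbo": (0.0005, 0.0015),
}


def estimate_cost(model, input_tokens, output_tokens):
    """Estimate the USD cost of one request from its token counts."""
    input_price, output_price = PRICES_PER_1K[model]
    return (input_tokens / 1000) * input_price + (output_tokens / 1000) * output_price


if __name__ == "__main__":
    # Compare the same request (1,200 input tokens, 300 output tokens) across models.
    for name in PRICES_PER_1K:
        print(name, round(estimate_cost(name, 1200, 300), 5))
```

With these table prices, a request of 1,200 input tokens and 300 output tokens costs about $0.021 on GPT-4 Turbo and about $0.00105 on GPT-3.5 Turbo, a 20x difference.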

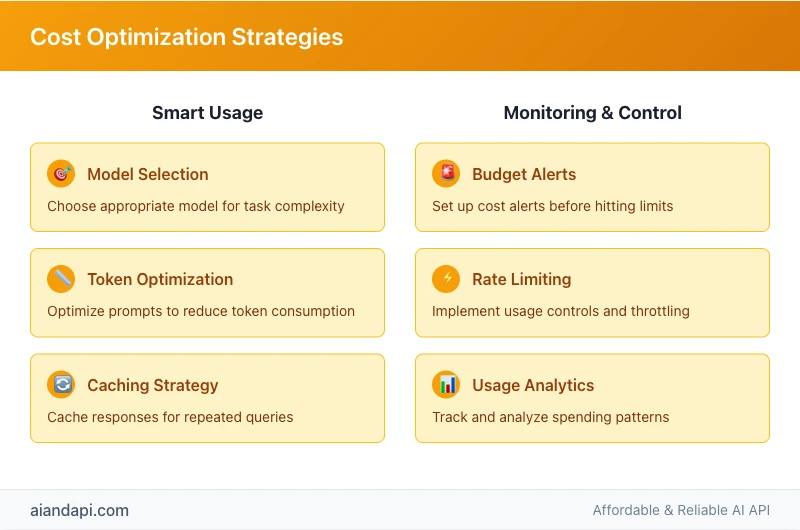

Pricing Structure and Cost Optimization

Free Tier Utilization: New accounts receive $5 in free credits, sufficient for testing and prototyping small-scale applications. As of 2025, OpenAI has also introduced usage-based rate limits that automatically scale based on organization history, replacing the previous tier-based system. Enterprise applications typically require paid plans with usage-based scaling.

Cost Optimization Strategies: A Sedai.io analysis recommends selecting models based on task complexity, adjusting the temperature parameter for consistent outputs, and setting token limits to prevent excessive usage. Production deployments also benefit from rate limiting and usage monitoring dashboards.

Free Tier Utilization and Scaling Strategy

Initial development phases can maximize free tier benefits through efficient prompt engineering and model selection. Business scaling framework involves establishing usage baselines, implementing cost alerts, and developing tiered pricing strategies that align API costs with application revenue models.

ChatGPT API Integration: Architecture and Implementation Patterns

ChatGPT API integration requires architectural decisions that balance security, performance, and scalability while maintaining code simplicity. Supporting evidence: The 5-step enterprise integration methodology from AppVenturez emphasizes planning, security, and systematic implementation over complex coding approaches.

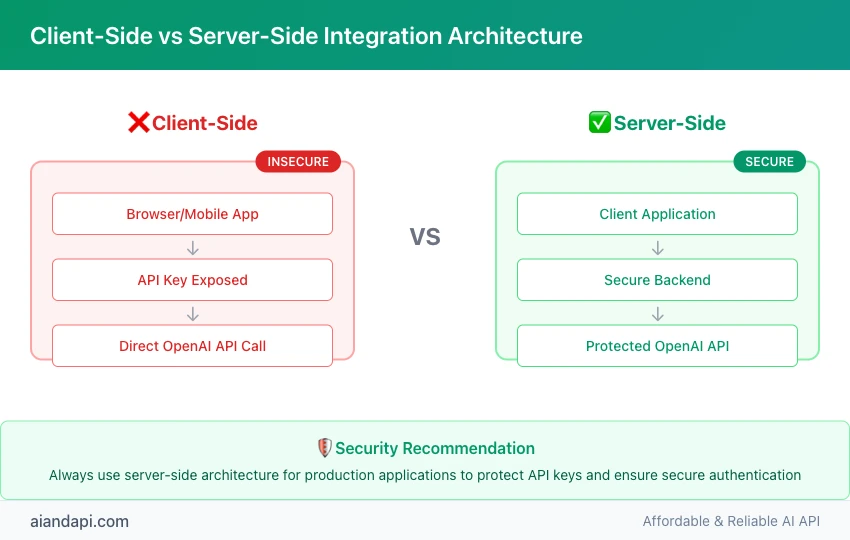

Client-Side vs Server-Side Integration Architecture

Security Architecture Decision Framework: The fundamental choice between client-side and server-side integration determines your application's security posture and compliance capabilities.

🏆 Critical Security Warning: "Exposing your OpenAI API key in client-side environments like browsers or mobile apps allows malicious users to take that key and make requests on your behalf" - this OpenAI security principle mandates server-side architecture for production applications.

Enterprise Security Model: The recommended architecture places API keys exclusively on secure server infrastructure, with client applications communicating through authenticated proxy endpoints. This pattern prevents credential exposure while enabling secure website integration. For businesses looking to integrate ChatGPT on a website, this server-side approach supports both security and scalability.

Authentication and Request Handling Patterns

API Authentication Flow: A standard implementation follows an OAuth-style pattern in which client applications receive temporary tokens from your backend rather than direct access to the API key. Based on the AppVenturez methodology, the flow has three steps:

- Client Request Initiation: User applications send requests to your secure backend

- Server-Side Authentication: Backend validates user permissions and initiates OpenAI API calls

- Response Processing: Server handles OpenAI responses and formats data for client consumption

Minimal Code Example - Environment Configuration:

export OPENAI_API_KEY="your-secret-key"
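The three-step flow above can be sketched with the Python standard library alone. The function names `build_openai_request` and `handle_client_request` are hypothetical. The boolean flag stands in for whatever session or token check your backend performs, and the model name is only an example. The sketch builds the upstream request without sending it, so the key never leaves the server.

```python
import json
import urllib.request

# Chat Completions endpoint of the OpenAI API.
OPENAI_CHAT_URL = "https://api.openai.com/v1/chat/completions"


def build_openai_request(user_message, api_key, model="gpt-4o"):
    """Build (but do not send) the server-to-OpenAI request for one user message."""
    payload = {
        "model": model,  # example model name; choose one that fits your budget
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        OPENAI_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # the key stays on the server
            "Content-Type": "application/json",
        },
        method="POST",
    )


def handle_client_request(user_is_authenticated, user_message, api_key):
    """Hypothetical backend handler: validate the user, then prepare the upstream call."""
    if not user_is_authenticated:
        raise PermissionError("user must be authenticated before calling the API")
    return build_openai_request(user_message, api_key)
```

On the server, the returned request could be sent with `urllib.request.urlopen(request, timeout=30)` and the JSON response reformatted before it is returned to the client.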

Error Handling and Reliability Strategies

Business Logic for Error Management: Production applications need robust error handling that covers rate limits, model availability, and network timeouts. Smart retry strategies use exponential backoff patterns with maximum attempt limits to prevent cascading failures that could bring down your entire system.
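A minimal sketch of exponential backoff with a maximum attempt limit follows. `TransientError` is a hypothetical marker exception: in a real integration you would map rate-limit responses and timeouts onto it. The default delays and the 10% jitter are assumptions chosen for illustration.

```python
import random
import time


class TransientError(Exception):
    """Hypothetical marker for retryable failures such as rate limits or timeouts."""


def call_with_backoff(operation, max_attempts=5, base_delay=1.0, sleep=time.sleep):
    """Run `operation`, retrying transient failures with exponentially growing delays."""
    for attempt in range(max_attempts):
        try:
            return operation()
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # attempt limit reached: surface the error to the caller
            # The delay doubles each attempt: base, 2*base, 4*base, ...
            delay = base_delay * (2 ** attempt)
            # Up to 10% random jitter keeps many clients from retrying in lockstep.
            sleep(delay + random.uniform(0, delay * 0.1))
```

Passing `sleep` as a parameter keeps the function testable without real waiting. The attempt limit is what stops a failing dependency from being retried indefinitely.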

Rate Limiting Management: OpenAI implements usage-based rate limits that automatically adjust based on organization history and trust levels, replacing the previous tiered system in 2025. Enterprise applications benefit from client-side rate limiting, meaning throttling outbound requests in your own backend (the client of the OpenAI API), to prevent API quota exhaustion and unexpected service interruptions.
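One common way to throttle outbound calls is a token bucket. The sketch below is an illustrative, single-threaded version. The class name, the parameters, and the injectable clock are assumptions, and the rate you choose should come from your own quota rather than from this example.

```python
import time


class TokenBucket:
    """Allow about `rate` requests per second, with bursts up to `capacity`."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)  # start with a full bucket
        self.last = clock()

    def try_acquire(self):
        """Return True if a request may be sent now, otherwise False."""
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

A backend would call `try_acquire()` before each upstream request and queue or reject the request when it returns `False`. A multi-threaded server would also need a lock around the update.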

Performance Optimization and Scalability

Response Time Optimization: Architecture considerations include connection pooling, request batching for multiple operations, and caching strategies for repeated queries. Supporting data: The rise in Tau-Bench Retail scores from 73.9% to 78.2% demonstrates the value of advanced API features like the Responses API for complex workflows.
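Caching repeated queries can be sketched as an in-memory map keyed by a hash of the model and prompt. `ResponseCache` and its `call` parameter are hypothetical. A production system would likely add expiry and a shared store, and caching only makes sense where identical prompts should yield identical answers.

```python
import hashlib
import json


class ResponseCache:
    """In-memory cache for identical (model, prompt) pairs."""

    def __init__(self):
        self._store = {}

    @staticmethod
    def _key(model, prompt):
        # A stable JSON encoding makes equal inputs hash to the same key.
        raw = json.dumps({"model": model, "prompt": prompt}, sort_keys=True)
        return hashlib.sha256(raw.encode("utf-8")).hexdigest()

    def get_or_call(self, model, prompt, call):
        """Return the cached response, invoking `call(model, prompt)` only on a miss."""
        key = self._key(model, prompt)
        if key not in self._store:
            self._store[key] = call(model, prompt)
        return self._store[key]
```

Here `call` stands for whatever function performs the actual API request, so a cache hit avoids both the latency and the token cost of a repeated query.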

Enterprise Scaling Preparation: Production deployments require monitoring dashboards, automated scaling policies, and failover mechanisms. Load balancing across multiple API keys and geographic regions ensures consistent service availability during high-demand periods.

Business Implementation and Enterprise Deployment

OpenAI API integration in enterprise environments requires strategic planning that addresses compliance, data governance, and organizational workflows beyond technical implementation. Supporting evidence: Enterprise case studies demonstrate successful deployments through systematic business process integration rather than purely technical approaches.

Customer Service Chatbot Integration

Business Process Design: The most successful customer service integrations focus on workflow optimization where AI augments human agents rather than replacing them entirely. Authority case validation: Analysis of the 2.47M Reddit community reveals winning patterns that emphasize human oversight, escalation procedures, and robust quality assurance frameworks.

User Experience Optimization: The best implementations establish clear boundaries for AI responses, implement confidence scoring for automated replies, and maintain seamless handoff procedures to human agents when complex issues arise. This thoughtful approach ensures customer satisfaction while maximizing operational efficiency.
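The handoff rule described above can be sketched as a simple threshold check. The confidence score here is hypothetical and would have to come from your own evaluator or heuristics. The names `DraftReply` and `route_reply` and the 0.75 default are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class DraftReply:
    text: str
    confidence: float  # hypothetical score in [0, 1] produced by your own evaluator


def route_reply(draft, threshold=0.75):
    """Send confident drafts automatically and route the rest to a human agent."""
    if draft.confidence >= threshold:
        return ("auto", draft.text)
    return ("human", draft.text)
```

Keeping the threshold as a parameter lets a support team tighten or relax automation as quality assurance data accumulates.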

Content Generation and Automation Systems

Enterprise Content Workflows: AI-enhanced content systems integrate with existing editorial processes, compliance review cycles, and brand guideline enforcement. Authority support: Agentic workflow definitions from official OpenAI guidance describe "AI systems that delegate varying degrees of decision-making to the underlying model" - enabling sophisticated content automation while maintaining editorial control.

Quality Control Integration: Production content systems implement multi-stage review processes where AI-generated content undergoes automated quality checks, human editorial review, and compliance validation before publication.

Compliance and Data Privacy Considerations

Regulatory Framework Alignment: Enterprise deployments must address GDPR, CCPA, and industry-specific regulations through data handling policies, audit trails, and user consent management. Authority framework: OpenAI's security best practices provide foundation guidelines that enterprises must extend with additional compliance layers.

Data Privacy Strategy: Successful implementations anonymize sensitive data before API transmission, implement data retention policies aligned with business requirements, and establish clear data processing agreements with appropriate security certifications.
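As a rough illustration of anonymizing data before transmission, the sketch below masks email addresses and phone-like numbers with regular expressions. The patterns are simplified assumptions and are not a complete PII detector. Regulated deployments would need a dedicated, tested redaction step.

```python
import re

# Simplified, illustrative patterns; they will miss some formats and may over-match.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text):
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


if __name__ == "__main__":
    print(redact("Contact jane.doe@example.com or +1 (555) 123-4567 today"))
```

Running the redaction on the server, before the prompt is assembled, means the original values never reach the API request.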

Testing, Monitoring and Optimization Strategies

OpenAI API integration requires systematic testing frameworks and continuous monitoring to ensure production reliability and cost efficiency. According to cost optimization reports, successful deployments implement comprehensive tracking that prevents budget overruns while maintaining service quality.

Development and Production Testing Framework

Multi-Environment Strategy: Testing protocols establish development, staging, and production environments with separate API keys and usage quotas. This approach enables safe experimentation without impacting live applications. A/B testing integration allows comparison of different models, prompt strategies, and parameter configurations to optimize both performance and costs.
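For A/B comparisons, one simple approach is deterministic assignment: hashing a user identifier so that each user always sees the same variant. The function name and the variant labels below are hypothetical, and this is a sketch rather than a full experimentation framework.

```python
import hashlib


def assign_variant(user_id, variants=("A", "B")):
    """Deterministically map a user ID to one of the configured variants."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    # Use the first 8 bytes as an integer so the spread across variants is even.
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]
```

Each variant can then map to a different model, prompt strategy, or parameter configuration, with results compared on cost and quality metrics.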

Performance Monitoring and Cost Control

Key Performance Indicators: Essential metrics include response latency, success rates, token consumption patterns, and cost per transaction. Cost optimization analyses recommend real-time monitoring dashboards that track usage against predefined budgets and alert administrators before thresholds are exceeded.
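The four metrics named above can be computed from per-call records, as in this sketch. The `CallRecord` fields and the `summarize` helper are hypothetical. A real deployment would feed these values into its monitoring system.

```python
from dataclasses import dataclass


@dataclass
class CallRecord:
    latency_s: float   # wall-clock time of the request in seconds
    succeeded: bool    # whether the call returned a usable response
    tokens: int        # total tokens consumed by the call
    cost_usd: float    # estimated cost of the call


def summarize(records):
    """Aggregate call records into latency, success rate, tokens, and cost per transaction."""
    total = len(records)
    if total == 0:
        return {"avg_latency_s": 0.0, "success_rate": 0.0,
                "total_tokens": 0, "cost_per_transaction": 0.0}
    return {
        "avg_latency_s": sum(r.latency_s for r in records) / total,
        "success_rate": sum(1 for r in records if r.succeeded) / total,
        "total_tokens": sum(r.tokens for r in records),
        "cost_per_transaction": sum(r.cost_usd for r in records) / total,
    }
```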

Budget Management Framework:

# Cost monitoring example

API_BUDGET_ALERT_THRESHOLD="80%"
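A minimal sketch of how an application might act on that setting: parse the percentage and compare spend against the budget. The helper names are hypothetical, and the 80% fallback mirrors the example value above.

```python
import os


def parse_threshold(value):
    """Convert a percentage string such as "80%" into a fraction such as 0.8."""
    return float(value.strip().rstrip("%")) / 100


def load_threshold(env=os.environ):
    """Read API_BUDGET_ALERT_THRESHOLD, falling back to the example value of 80%."""
    return parse_threshold(env.get("API_BUDGET_ALERT_THRESHOLD", "80%"))


def should_alert(spent_usd, budget_usd, threshold):
    """Return True once spend reaches the configured share of the budget."""
    return spent_usd >= budget_usd * threshold
```

For example, with a $100 monthly budget and the 80% threshold, `should_alert(85.0, 100.0, load_threshold())` would trigger an alert, while a spend of $50 would not.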

Predictive Cost Analysis: Advanced implementations correlate usage patterns with business metrics, enabling accurate budget forecasting and identifying optimization opportunities before they impact operations.

Troubleshooting and Support Resources

Solution Library Development: Common integration challenges include authentication failures, rate-limit-exceeded errors, and model availability issues. Community support: The 2,656 StackOverflow questions provide an extensive troubleshooting knowledge base covering framework-specific implementations and error resolution patterns.

Escalation Framework: Production support procedures establish clear escalation paths from automated monitoring alerts through technical team response to vendor support engagement when necessary.

Frequently Asked Questions

Q1: Can I use OpenAI API for free?

A: Yes, OpenAI API provides free credits for new users to test and explore API capabilities. New accounts receive $5 in initial credits, sufficient for prototyping and small-scale applications. However, production applications typically require paid plans based on usage volume and model complexity.

Q2: Is OpenAI API the same as ChatGPT?

A: No, the OpenAI API is a programming interface that provides programmatic access to AI models, while ChatGPT is a web-based chat application. The API offers more flexibility for integration into custom applications and supports multiple models beyond conversational AI, including image generation and speech processing.

Q3: What does the OpenAI API do?

A: OpenAI API provides programmatic access to advanced AI models including GPT for text generation, DALL-E for image creation, and Whisper for speech recognition. Developers can integrate these capabilities into applications for tasks like customer service automation, content generation, data analysis, and multi-modal AI experiences.

Q4: How much does OpenAI API cost?

A: Pricing follows a token-based model with costs varying by model complexity. GPT-4o costs $0.005 per 1,000 input tokens and $0.015 per 1,000 output tokens, while GPT-3.5 Turbo costs $0.0005/$0.0015 per 1,000 tokens respectively. GPT-4 Turbo costs $0.01/$0.03 per 1,000 tokens. Image generation through DALL-E ranges from $0.040 to $0.120 per image depending on resolution and quality settings.

Q5: Where do I find my OpenAI API key?

A: Access your API keys through platform.openai.com by logging into your account, navigating to the API Keys section, and clicking "Create new secret key." Always store keys securely using environment variables and never expose them in client-side code or public repositories.

Conclusion

OpenAI API integration transforms business applications through accessible AI capabilities that deliver measurable value without overwhelming technical complexity. This comprehensive guide has covered the essential journey from initial setup through enterprise deployment, emphasizing practical implementation strategies that prioritize business outcomes over extensive coding requirements.

Key implementation success factors include security-first architecture design, strategic model selection aligned with cost optimization goals, and systematic testing frameworks that ensure production reliability. The authority-backed best practices outlined here—from API key management through compliance considerations—provide a proven foundation for successful deployments across diverse business contexts.

Next Steps: Begin with the free tier to prototype your integration, implement server-side architecture for security, and establish monitoring frameworks before scaling to production volumes. Whether developing customer service automation, content generation systems, or multi-modal applications, these principles ensure sustainable and cost-effective OpenAI API integration that grows with your business needs.

The combination of official OpenAI guidance, community-validated patterns, and enterprise-tested strategies positions your organization for AI integration success. Take action today by securing your API key and implementing the security-first approach that forms the foundation of all successful OpenAI integrations.