Model Context Protocol (MCP) is a significant step forward for AI application development, offering developers a standardized way to connect AI models with external data sources and tools. According to Anthropic's official documentation, MCP is an open protocol that standardizes how applications provide context to large language models (LLMs). Think of MCP like a USB-C port for AI applications - just as USB-C simplified device connectivity by replacing dozens of proprietary cables, MCP replaces one-off custom integrations with a universal interface for AI models to access diverse data sources and capabilities.

The Model Context Protocol emerged in November 2024 as Anthropic's answer to a persistent problem: a fragmented AI integration landscape in which each application required custom connectors for external services. Adoption has been rapid: monthly searches for "mcp server" have reportedly grown to over 110,000, and the r/mcp community on Reddit has passed 60K members. This organic growth signals that MCP has struck a chord with developers tired of building one-off integrations.

This comprehensive guide walks you through everything you need to know about MCP, from fundamental concepts to hands-on implementation. You'll discover how MCP transforms the way developers build AI workflows, explore its three core building blocks (Tools, Resources, and Prompts), and learn practical techniques for creating more powerful, integrated AI applications. Whether you're a developer ready to dive into MCP integration or simply curious about this emerging technology, this guide serves as your definitive roadmap to understanding and implementing the Model Context Protocol.

What is Model Context Protocol? Understanding the Fundamentals

Model Context Protocol (MCP) is an open protocol that standardizes how applications provide context to large language models (LLMs). Developed by Anthropic, MCP addresses a critical challenge in AI application development: the complexity of connecting AI models with external data sources, tools, and services.

Core Definition and Official Explanation

The Model Context Protocol fundamentally changes how AI applications interact with external systems. Instead of writing custom integration code for every data source or tool, developers can rely on one standardized communication protocol shared by every MCP client and server. MCP uses JSON-RPC 2.0 as its underlying message format, a simple and widely used standard for exchanging requests, responses, and notifications between clients and servers.

What is Model Context Protocol? Simply put, MCP is an open protocol that enables AI models to securely access external tools and data sources through a standardized interface. Unlike traditional API integrations that demand custom code for each connection, MCP offers connectivity patterns that work the same way across different services and platforms.

At its heart, MCP serves as a universal connector for AI integrations. An application that implements an MCP client can reach databases, file systems, APIs, and other services through the corresponding MCP servers, without writing a custom integration for each one. This standardization cuts development complexity and opens the door to a rapidly expanding ecosystem of MCP server implementations.

The MCP ecosystem has blossomed to include hundreds of community-contributed servers, spanning everything from simple filesystem access to sophisticated database integrations. This wealth of ready-made components makes it easier for developers to build capable AI applications without the typical integration headaches. Anthropic's documentation for Claude describes how applications such as Claude Desktop and Claude Code connect to MCP servers to give the model access to external tools and data.

The USB-C Analogy: Why It Matters

The USB-C analogy perfectly captures MCP's value proposition. Before USB-C, connecting devices was a nightmare of proprietary cables and adapters - every manufacturer seemed to have their own connection standard. USB-C changed everything by providing a single, universal connector that works across devices and manufacturers.

The parallel with AI applications is striking. Before MCP, building a chatbot that needed to access a database, file system, and email service meant crafting three entirely separate integration implementations. With MCP, that same chatbot connects to all three services using the same protocol, transforming what used to be a maintenance nightmare into elegant simplicity.

This standardization unlocks capabilities that were previously complex or impossible:

- Dynamic Discovery: AI applications automatically discover available tools and resources without hardcoded configurations

- Persistent Communication: Unlike traditional one-shot API calls, MCP supports ongoing conversations between AI applications and external services

- Universal Compatibility: Any MCP server works with any MCP client, regardless of the underlying technology stack

- Simplified Development: Developers can focus on business logic rather than getting bogged down in integration complexity

The protocol's thoughtful design reflects four key value propositions highlighted in the official documentation: a growing library of pre-built integrations, standardized integration patterns, open protocol implementation, and the flexibility to switch between applications while maintaining context.

How Model Context Protocol Works: Architecture and Components

What is MCP and how does it work? MCP uses a client-server architecture built on JSON-RPC 2.0. The process involves four key steps: 1) a host application runs one MCP client for each server it connects to, 2) each client connects to its MCP server through the standardized protocol, 3) servers expose tools and data through three building blocks (Tools, Resources, and Prompts), and 4) the LLM accesses real-time information through those established connections.

This architecture gives AI applications a uniform way to reach external services: the protocol defines the message formats for discovery, invocation, and errors, while the transport layer handles connection details such as authentication for remote servers. As a result, developers can concentrate on building AI functionality rather than wrestling with connection complexity.

According to the official MCP architecture documentation, the protocol builds on the established JSON-RPC 2.0 standard for communication between AI applications and external services.

MCP Architecture Components

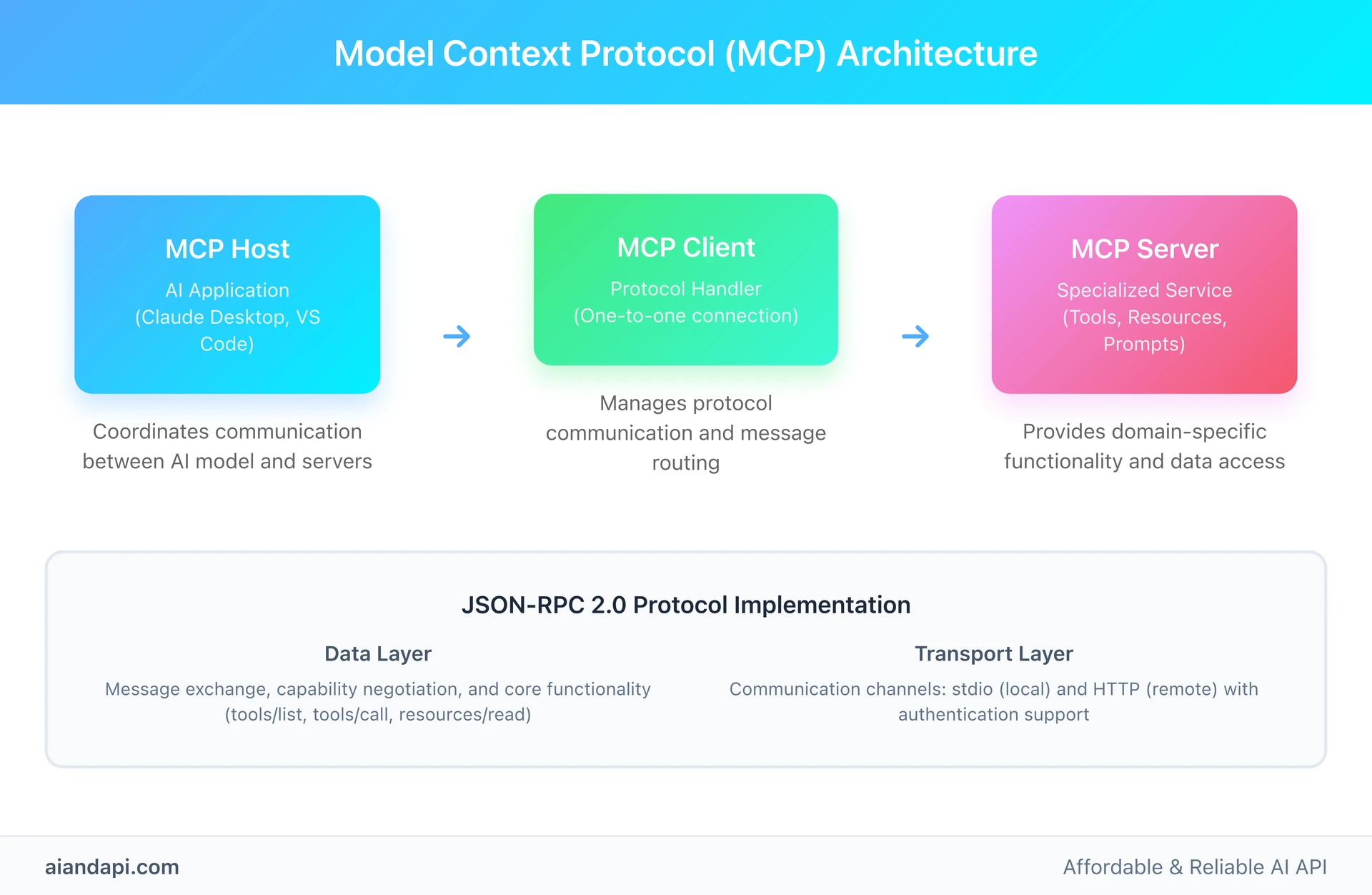

The Model Context Protocol follows a clean, layered architecture with three distinct participant types that work together to enable seamless AI integration:

MCP Host serves as the coordinating AI application - think Claude Code, Claude Desktop, or Visual Studio Code. The host manages multiple MCP clients simultaneously and orchestrates communication between the AI model and connected servers. For example, when you use Claude Desktop to access multiple data sources, Claude Desktop acts as the MCP host juggling all those connections behind the scenes.

MCP Client maintains dedicated one-to-one connections with MCP servers. Each client handles protocol communication, message routing, and error handling for its specific server connection. Think of the client as a specialized translator that abstracts protocol complexity from the host application while ensuring reliable communication with external services.

MCP Server programs provide specialized functionality for specific domains. These range from local MCP servers running on the same machine (using stdio transport) to remote servers accessible over HTTP. Popular examples include filesystem servers for document access, database servers for queries, and API servers for external service integration. The community-maintained "awesome MCP servers" list on GitHub catalogs a large number of community-built servers, including ones for popular services like Gmail, Slack, and PostgreSQL.

JSON-RPC 2.0 Protocol Implementation

The protocol implementation consists of two complementary layers that work together to enable reliable communication:

The Data Layer implements JSON-RPC 2.0 based message exchange, handling lifecycle management, capability negotiation, and core functionality. This layer defines how clients and servers communicate about available tools, resources, and prompts. It includes standardized methods like tools/list for discovery, tools/call for execution, and notification mechanisms for real-time updates.
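
A minimal sketch of the data layer, in Python with only the standard library, shows what discovery and execution requests look like on the wire. The method names `tools/list` and `tools/call`, the `name`/`arguments` parameters, and the `content`/`isError` result fields follow the MCP specification; the `make_request` and `match_response` helpers and the `get_weather` tool are hypothetical, and in practice an official SDK builds these messages for you.

```python
import itertools
import json

# Request ids only need to be unique within a session; a counter is the simplest choice.
_ids = itertools.count(1)

def make_request(method, params=None):
    """Build a JSON-RPC 2.0 request object (hypothetical helper)."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message

def match_response(pending, response):
    """Pop and return the request that a response answers, using the shared id."""
    return pending.pop(response["id"])

# Discovery: ask the server which tools it exposes.
list_req = make_request("tools/list")
# Execution: call a tool by name; "get_weather" and "city" are illustrative only.
call_req = make_request("tools/call", {"name": "get_weather", "arguments": {"city": "Paris"}})

pending = {list_req["id"]: list_req, call_req["id"]: call_req}

# A canned tools/call response; results carry a list of content items.
response = {"jsonrpc": "2.0", "id": call_req["id"],
            "result": {"content": [{"type": "text", "text": "Sunny, 18 C"}], "isError": False}}
answered = match_response(pending, response)
print(json.dumps(list_req))
print(answered["method"], response["result"]["content"][0]["text"])
```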

The Transport Layer manages the communication channels between clients and servers. MCP supports two transport mechanisms: stdio transport for local process communication (optimal performance with no network overhead) and streamable HTTP transport for remote server communication with standard authentication methods including OAuth, bearer tokens, and API keys.
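
For the stdio transport, the specification frames each JSON-RPC message as one line of UTF-8 JSON, and messages must not contain embedded newlines. The sketch below assumes that framing; an in-memory `io.StringIO` buffer stands in for the pipes between a client and a server process, and the helper names and the `save_note` tool are hypothetical.

```python
import io
import json

def write_message(stream, message):
    """Write one JSON-RPC message as a single newline-terminated line."""
    # json.dumps without indent emits no literal newlines: newlines inside
    # strings are escaped as \n, so each message stays on one line.
    stream.write(json.dumps(message, separators=(",", ":")) + "\n")

def read_messages(stream):
    """Yield JSON-RPC messages from a newline-delimited stream."""
    for line in stream:
        line = line.strip()
        if line:  # tolerate stray blank lines
            yield json.loads(line)

# A real client would use the server process's stdin/stdout;
# an in-memory buffer stands in for them here.
pipe = io.StringIO()
write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
write_message(pipe, {"jsonrpc": "2.0", "id": 2, "method": "tools/call",
                     "params": {"name": "save_note", "arguments": {"text": "line one\nline two"}}})
pipe.seek(0)
received = list(read_messages(pipe))
print([m["method"] for m in received])
```

Even though the second message carries a newline inside its text argument, the serialized stream still contains exactly one newline per message.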

This layered approach enables the same JSON-RPC message format to work across all transport mechanisms, whether you're connecting to a local database server or a remote API service. The transport layer handles connection establishment, message framing, and security, while the data layer focuses on protocol semantics and AI-specific functionality.

Communication Flow and Real-Time Updates

MCP's communication model supports both request-response patterns and real-time notifications. During initialization, clients and servers negotiate capabilities through a handshake process that establishes supported features and protocol versions. This negotiation ensures compatibility and enables automatic feature discovery without manual configuration.
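
The initialization handshake can be sketched from the server's side. The `initialize` method, the `protocolVersion`, `capabilities`, `clientInfo`, and `serverInfo` fields, and the follow-up `notifications/initialized` notification come from the specification; the fallback rule below (echo the client's version if supported, otherwise propose the newest one) is a simplified reading of the spec's negotiation guidance, and the server name and capability set are hypothetical.

```python
# Versions this hypothetical server implements, newest first.
SUPPORTED_VERSIONS = ["2025-06-18", "2025-03-26", "2024-11-05"]

SERVER_CAPABILITIES = {
    "tools": {"listChanged": True},  # may send notifications/tools/list_changed
    "resources": {"subscribe": True, "listChanged": True},
}

def handle_initialize(request):
    """Answer an initialize request, negotiating the protocol version."""
    requested = request["params"]["protocolVersion"]
    # Echo the client's version when supported; otherwise propose our newest
    # and let the client decide whether to continue or disconnect.
    version = requested if requested in SUPPORTED_VERSIONS else SUPPORTED_VERSIONS[0]
    return {
        "jsonrpc": "2.0",
        "id": request["id"],
        "result": {
            "protocolVersion": version,
            "capabilities": SERVER_CAPABILITIES,
            "serverInfo": {"name": "example-server", "version": "0.1.0"},
        },
    }

def make_init_request(version):
    """Build the client's opening request for a given protocol version."""
    return {
        "jsonrpc": "2.0", "id": 1, "method": "initialize",
        "params": {
            "protocolVersion": version,
            "capabilities": {"sampling": {}},  # the client offers sampling support
            "clientInfo": {"name": "example-client", "version": "0.1.0"},
        },
    }

reply = handle_initialize(make_init_request("2025-03-26"))
fallback = handle_initialize(make_init_request("2023-01-01"))
# Once the client accepts the reply, it sends this notification (no id, no reply).
initialized = {"jsonrpc": "2.0", "method": "notifications/initialized"}
print(reply["result"]["protocolVersion"], fallback["result"]["protocolVersion"])
```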

The protocol supports dynamic updates through its notification system. When a server's capabilities change - such as new tools becoming available or resources being updated - the server can notify connected clients immediately. This capability allows AI applications to adapt to changing environments without requiring restarts or manual reconfiguration.

Imagine a database server adding new query capabilities or a filesystem server detecting new documents: connected AI applications receive a notification, re-query the server, and can immediately use the new functionality. This real-time aspect makes MCP particularly useful in dynamic environments where capabilities and data sources frequently evolve.
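
On the client side, handling such an update can be as simple as re-fetching the tool list. A JSON-RPC notification is a message with a `method` but no `id`, so it never receives a reply. In this sketch the `ToolCache` class is hypothetical and a lambda stands in for sending `tools/list` to a real server; only the `notifications/tools/list_changed` method name comes from the specification.

```python
def is_notification(message):
    """JSON-RPC 2.0 notifications have a method but no id, so they get no reply."""
    return "method" in message and "id" not in message

class ToolCache:
    """Client-side view of one server's tools, refreshed on list_changed."""

    def __init__(self, fetch_tools):
        # fetch_tools stands in for sending tools/list and returning result["tools"].
        self._fetch_tools = fetch_tools
        self.tools = self._load()

    def _load(self):
        return {tool["name"]: tool for tool in self._fetch_tools()}

    def on_message(self, message):
        if is_notification(message) and message["method"] == "notifications/tools/list_changed":
            # The notification only says "something changed"; re-query to learn what.
            self.tools = self._load()

server_tools = [{"name": "search_files"}]  # simulated server state
cache = ToolCache(lambda: list(server_tools))
server_tools.append({"name": "read_file"})  # the server gains a tool at runtime
cache.on_message({"jsonrpc": "2.0", "method": "notifications/tools/list_changed"})
print(sorted(cache.tools))  # ['read_file', 'search_files']
```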

Key Benefits and Value Propositions of MCP Protocol

What are the benefits of the MCP protocol? MCP delivers four core advantages that improve both the efficiency of AI application development and the capabilities of the resulting applications. According to the official FAQ, MCP reduces development time and complexity when building AI applications that need to access various data sources, freeing developers to focus on creating great AI experiences rather than repeatedly building custom connectors.

In practice, this means reduced integration complexity, easier scaling to many services, and a consistent place to apply security controls, compared with maintaining a separate custom API integration for every service.

Standardization and Interoperability

The primary value of MCP lies in its standardization approach, which eliminates the fragmentation that has historically plagued AI application development. Before MCP, each integration demanded custom code, proprietary APIs, and unique authentication mechanisms. This created a maintenance burden where developers found themselves spending more time managing integrations than actually building AI functionality.

MCP's standardized protocol means that once an AI application implements MCP client capabilities, it gains immediate access to any MCP server regardless of the underlying technology. A travel planning AI can seamlessly tap into flight booking APIs, weather services, and calendar systems using the same protocol implementation. This interoperability extends beyond individual applications - MCP servers built for one use case can be repurposed across different AI applications without modification.

The standardization also creates powerful ecosystem effects. As more services implement MCP server interfaces, the entire AI development community benefits. Developers can share MCP servers, contribute to open-source implementations, and build upon each other's work rather than recreating similar integrations from scratch.

Developer Experience and Efficiency

MCP dramatically boosts developer productivity through several key mechanisms. The protocol's thoughtful design abstracts away integration complexity, freeing developers to focus on AI-specific logic rather than getting bogged down in connection management, error handling, and protocol implementation details.

Efficiency gains include faster time-to-market for AI applications, lower maintenance overhead for existing integrations, and more predictable behavior through standardized error reporting. The official SDKs handle protocol details automatically, so developers can implement multi-server workflows with relatively little code.

The practical impact can be significant: connecting an AI application to three different services no longer means writing three bespoke integrations, and when ready-made MCP servers exist for those services, the work shrinks to configuration. The protocol's dynamic discovery also means applications can detect and use newly added capabilities without code changes, further reducing ongoing maintenance.

Scalability and Ecosystem Growth

MCP's architecture naturally supports scaling from simple single-server connections to complex multi-server orchestration. AI applications can coordinate dozens of specialized servers, each providing focused functionality for specific domains. This modular approach enables sophisticated workflows that were previously challenging to implement and maintain.

The ecosystem benefits compound as more organizations implement MCP servers. Currently, the community includes 60K+ active members in the r/mcp Reddit community, with growing adoption across enterprises and open-source projects. Each new MCP server implementation benefits the entire ecosystem, creating network effects that accelerate innovation.

This ecosystem approach enables specialized service providers to focus on their domain expertise while providing standardized access to AI applications. Database companies can build MCP servers that expose their query capabilities, API providers can standardize their AI integration interface, and tool developers can ensure their applications work seamlessly with AI systems.

Security and Trust Framework

MCP addresses security while keeping integrations easy to use. For remote servers, the protocol supports standard authentication mechanisms including OAuth, API keys, and custom headers, so integrations can plug into existing security infrastructure, and serving remote endpoints over HTTPS encrypts traffic in transit.

The specification's trust and safety principles call for hosts to obtain explicit user consent before invoking tools or exposing user data to servers. The protocol cannot enforce these principles itself, so each host application implements its own approval flow. This balance between automation and human oversight addresses key concerns about AI system autonomy while preserving the benefits of automated workflows.

Resource access should follow the principle of least privilege, with servers exposing only necessary capabilities and clients implementing appropriate access controls. Applied consistently, this security-first approach supports enterprise adoption while keeping the flexibility needed for AI innovation.

MCP vs Traditional APIs: Understanding the Differences

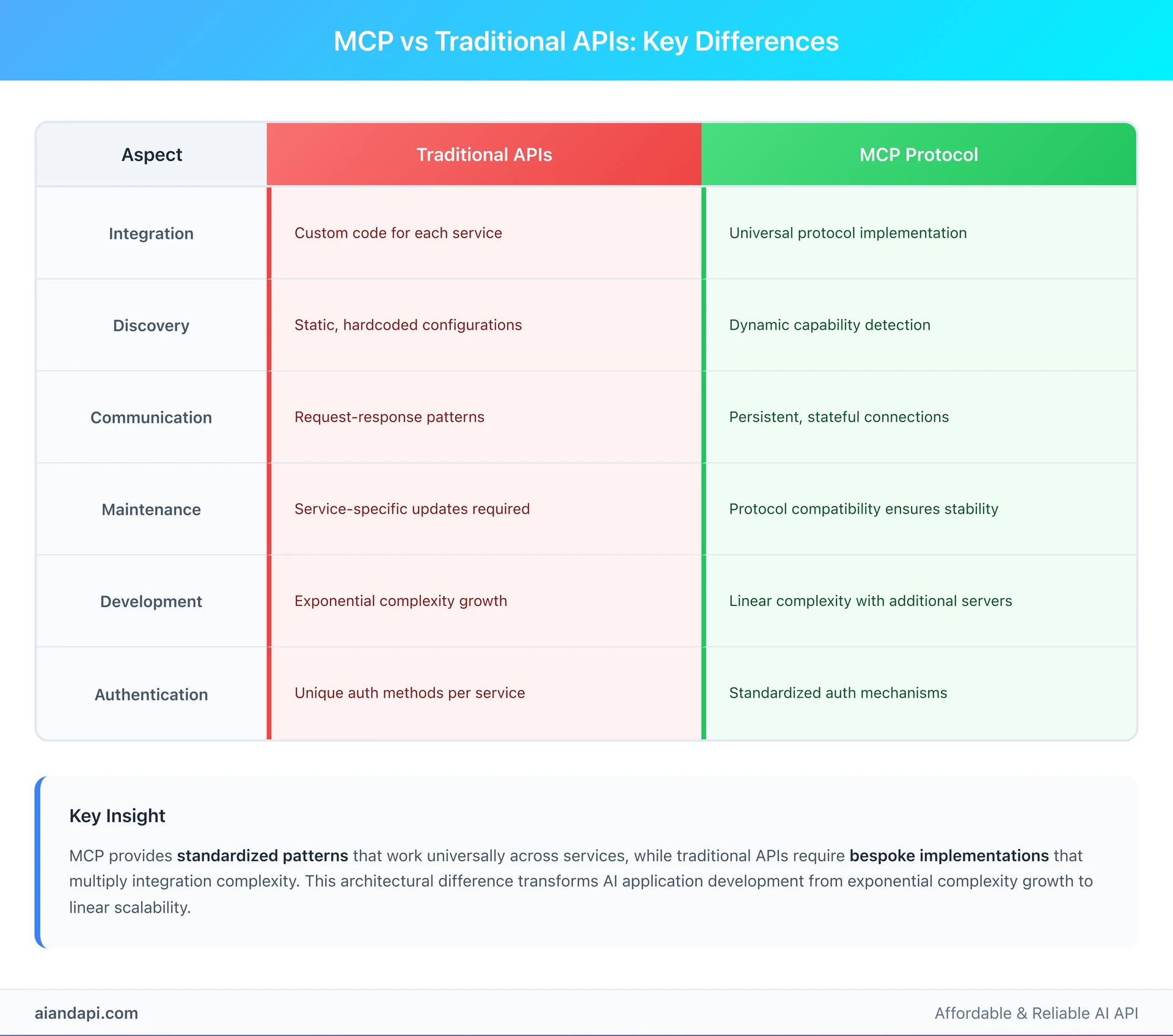

How is MCP different from an API? MCP represents a shift from traditional API integration: it offers dynamic discovery, persistent sessions, and standardized interfaces, whereas custom API integrations typically rely on static configuration, isolated request-response calls, and a bespoke implementation for each service.

Comparing MCP with traditional APIs reveals a clear advantage: while each API requires its own integration code, MCP servers expose standardized interfaces that any MCP client can use. Developers can therefore connect to many MCP servers with the same client implementation.

Discussions in the MCP Reddit community point to dynamic discovery of available tools as a key differentiator: traditional API integrations require hardcoded tool definitions, while MCP lets clients detect and adapt to capabilities at runtime.

Protocol vs Custom Implementation

Traditional API integration requires developers to implement custom connection logic for each service. This means crafting specific authentication handlers, error management, rate limiting, and data transformation code for every API endpoint. Each integration becomes a unique implementation requiring specialized knowledge of that particular service's quirks and requirements.

MCP changes this approach by providing a universal protocol layer. Instead of learning dozens of different API specifications, developers implement MCP client capabilities once and gain access to the wider MCP ecosystem. Connecting to an additional MCP server then typically requires only configuration, such as the command or URL used to reach it, because the existing client implementation already speaks the protocol.

The maintenance implications are significant. Traditional API integrations break when services change their endpoints, authentication methods, or data formats. With MCP, the message format stays the same across all connected services, and because clients discover tools and their schemas at runtime, many server-side changes reach clients without any client code changes.

Dynamic Discovery vs Static Configuration

One of MCP's most powerful advantages over traditional APIs is its dynamic discovery capability. Traditional integrations demand hardcoded knowledge of available endpoints, parameters, and capabilities. Developers must manually configure each integration and update configurations when services add new functionality.

MCP servers expose their capabilities through standardized discovery methods (tools/list, resources/list, and prompts/list). AI applications can query connected servers to learn which tools, resources, and prompts are available without prior configuration. When a server adds new capabilities, it can notify connected clients, which re-query the server and can immediately use the new functionality.
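
List methods in MCP can be paginated: a result may include an opaque `nextCursor`, which the client passes back as `cursor` to fetch the next page. A sketch of complete discovery under that assumption might look like this; `send_request` is a hypothetical stand-in for whatever sends a request and returns its `result`, and the two-page fake server exists only for illustration.

```python
def list_all_tools(send_request):
    """Collect every tool from a server, following tools/list pagination."""
    tools, cursor = [], None
    while True:
        params = {"cursor": cursor} if cursor else {}
        result = send_request("tools/list", params)
        tools.extend(result["tools"])
        cursor = result.get("nextCursor")  # absent on the last page
        if not cursor:
            return tools

# A fake two-page server; cursors are opaque strings chosen by the server.
PAGES = {
    None: {"tools": [{"name": "query_sales"}], "nextCursor": "page-2"},
    "page-2": {"tools": [{"name": "query_inventory"}]},
}

def fake_send(method, params):
    if method != "tools/list":
        raise ValueError(f"unexpected method {method}")
    return PAGES[params.get("cursor")]

names = [tool["name"] for tool in list_all_tools(fake_send)]
print(names)  # ['query_sales', 'query_inventory']
```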

This dynamic approach enables AI applications to adapt to changing environments without manual intervention. Consider a business intelligence AI connecting to multiple database servers - it can automatically detect when new data sources become available or when existing sources add new query capabilities. This flexibility proves particularly valuable in enterprise environments where data sources and tools frequently evolve.

Persistent Communication vs Request-Response

Traditional APIs follow request-response patterns where each interaction stands alone. This works well for simple data retrieval but becomes complex for AI applications that need ongoing context and state management. Maintaining conversation context, handling long-running operations, and coordinating multiple related requests requires extensive custom logic.

MCP supports persistent, stateful communication between AI applications and servers. This enables complex workflows where servers can maintain context across multiple interactions, provide progress updates for long-running operations, and send proactive notifications when relevant changes occur.

The persistent communication model particularly benefits AI applications that coordinate multiple services. A travel planning AI can keep sessions open with flight booking, hotel, and calendar servers at the same time, while the host application holds the overall context of the trip planning workflow and decides which server to call at each step.

Integration Complexity Comparison

Implementing traditional API integrations typically requires significant boilerplate code: authentication handling, HTTP client configuration, error retry logic, rate limiting, and service-specific data transformation. Each new integration adds another full set of this code, and across an organization the total grows with the number of applications multiplied by the number of services they connect to.

MCP reduces integration work to protocol implementation plus business logic. With M applications and N services, point-to-point integration needs up to M × N custom connectors, whereas MCP needs roughly M + N implementations: one client per application and one server per service. Each new MCP server connection requires configuration rather than custom code, and the official SDKs handle protocol details, allowing developers to focus on AI-specific functionality rather than integration mechanics.
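
A toy counting model makes the scaling argument concrete: if M applications each need N services, point-to-point integration requires M × N connectors, while MCP needs one client per application plus one server per service, M + N in total. The model assumes every application uses every service; real projects will not match these numbers exactly.

```python
def point_to_point(apps: int, services: int) -> int:
    """Every application maintains its own connector to every service."""
    return apps * services

def with_mcp(apps: int, services: int) -> int:
    """Each application implements one MCP client; each service ships one MCP server."""
    return apps + services

for apps, services in [(1, 3), (3, 3), (5, 20)]:
    print(f"{apps} apps x {services} services: "
          f"{point_to_point(apps, services)} connectors vs {with_mcp(apps, services)} MCP implementations")
```

With a single application and three services, the point-to-point count is actually lower (3 versus 4); the standardized approach pays off as both sides grow and as existing servers get reused across applications.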

This complexity reduction has real-world impact on development teams. Where traditional approaches might require dedicated integration developers for each major API connection, MCP enables generalist developers to implement and maintain multiple service connections using familiar protocol patterns.

MCP Server Implementation and Development Guide

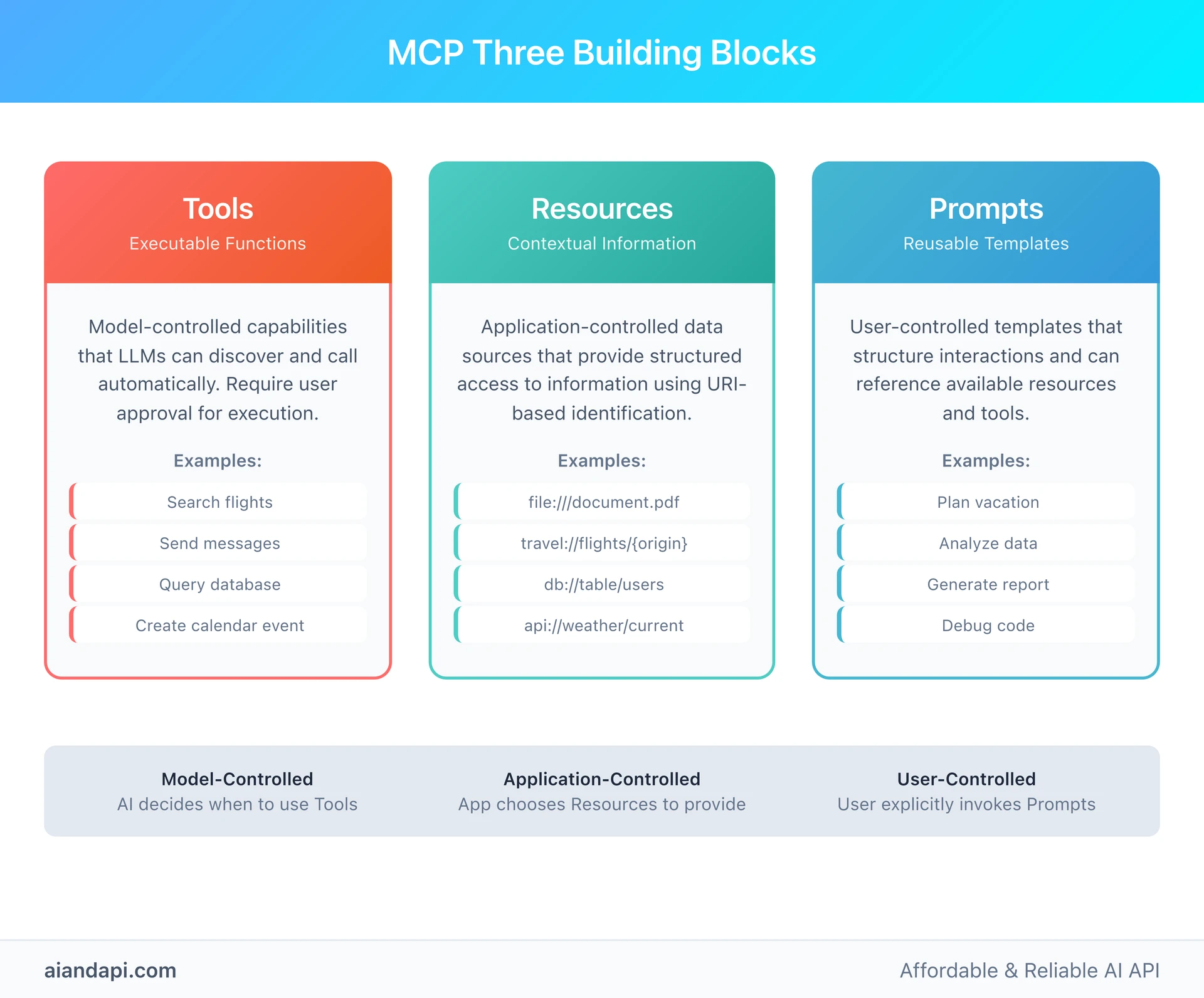

How do you build an MCP server? MCP servers provide functionality through three core building blocks that work together to create comprehensive AI integration capabilities. According to the official Server Concepts documentation, these building blocks (Tools, Resources, and Prompts) each serve distinct purposes while enabling sophisticated coordination patterns when combined.

Building an MCP server typically involves choosing an SDK (Python, TypeScript, or another supported language), defining your server's capabilities, and implementing the required protocol methods. The official MCP repositories on GitHub provide examples and templates to help you get started quickly.

Understanding the Three Building Blocks

Tools represent executable functions that AI applications can invoke to perform actions in the external world. These are model-controlled capabilities that LLMs can discover and call automatically based on context. Examples include searching flights, sending messages, creating calendar events, or querying databases. Each tool defines a specific operation with typed inputs using JSON Schema validation and structured outputs that AI applications can process.
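
A tool definition pairs a name and description with a JSON Schema for its inputs. The sketch below shows a hypothetical `search_flights` definition using the `name`, `description`, and `inputSchema` fields from the specification, plus a deliberately tiny validator that checks only `required`, primitive `type`, and `additionalProperties: false`. A production server would use a complete JSON Schema validator instead.

```python
# A tool definition as it might appear in a tools/list result.
# "search_flights" and its parameters are hypothetical.
SEARCH_FLIGHTS = {
    "name": "search_flights",
    "description": "Search for flights between two airports on a given date.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "origin": {"type": "string"},
            "destination": {"type": "string"},
            "date": {"type": "string"},
            "max_stops": {"type": "integer"},
        },
        "required": ["origin", "destination", "date"],
        "additionalProperties": False,
    },
}

# Python types accepted for each JSON Schema primitive type.
_JSON_TYPES = {"string": str, "integer": int, "number": (int, float),
               "boolean": bool, "object": dict, "array": list}

def validate_arguments(schema, arguments):
    """Return a list of problems with `arguments`; an empty list means valid.

    Handles only required, primitive type, and additionalProperties: false,
    a tiny subset of JSON Schema.
    """
    errors = [f"missing required argument '{name}'"
              for name in schema.get("required", []) if name not in arguments]
    for key, value in arguments.items():
        spec = schema.get("properties", {}).get(key)
        if spec is None:
            if schema.get("additionalProperties", True) is False:
                errors.append(f"unexpected argument '{key}'")
            continue
        expected = spec["type"]
        # bool is a subclass of int in Python, so reject it for numeric types.
        wrong_bool = isinstance(value, bool) and expected in ("integer", "number")
        if wrong_bool or not isinstance(value, _JSON_TYPES[expected]):
            errors.append(f"'{key}' should be of type {expected}")
    return errors

schema = SEARCH_FLIGHTS["inputSchema"]
print(validate_arguments(schema, {"origin": "CDG", "destination": "JFK"}))
```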

Before a tool runs, the host should obtain the user's approval, keeping a human in the loop while preserving AI functionality; the protocol cannot enforce this itself, so each host application implements it. The approval mechanism can range from per-action confirmation to pre-approved safe operations based on application policy.
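
An approval policy of this kind can be sketched as a small host-side gate. Everything here is hypothetical, including the `make_approval_gate` name and the tool names: pre-approved tools run without prompting, and every other call goes through a `confirm` callback that, in a real host, would show the tool name and arguments to the user.

```python
from typing import Callable, Dict, Set

def make_approval_gate(pre_approved: Set[str],
                       confirm: Callable[[str, Dict], bool]) -> Callable[[str, Dict], bool]:
    """Return a function that decides whether a tool call may run (hypothetical host policy)."""
    def may_run(tool_name: str, arguments: Dict) -> bool:
        if tool_name in pre_approved:
            return True  # e.g. read-only tools the user approved once
        return confirm(tool_name, arguments)  # otherwise ask every time
    return may_run

asked = []

def fake_confirm(tool_name, arguments):
    """Stands in for a UI dialog; here the 'user' declines deletions."""
    asked.append(tool_name)
    return tool_name != "delete_file"

gate = make_approval_gate({"read_file"}, fake_confirm)
decisions = [
    gate("read_file", {"path": "notes.txt"}),
    gate("send_email", {"to": "team@example.com"}),
    gate("delete_file", {"path": "notes.txt"}),
]
print(decisions, asked)  # [True, True, False] ['send_email', 'delete_file']
```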

Resources provide structured access to contextual information that AI applications need to understand their environment. Unlike tools, resources are application-controlled - the host application decides what information to retrieve and how to present it to the AI model. Resources use URI-based identification and support both direct resources (fixed URIs like file:///document.pdf) and resource templates (parameterized URIs like travel://flights/{origin}/{destination}).
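
Expanding a resource template is ordinary URI templating (RFC 6570); servers advertise templates through `resources/templates/list` using a `uriTemplate` field, and clients then read a concrete URI with `resources/read`. This sketch supports only simple `{name}` placeholders with percent-encoded values, which is enough for the `travel://flights/{origin}/{destination}` example but not the full RFC; the request id is arbitrary.

```python
import re
from urllib.parse import quote

_PLACEHOLDER = re.compile(r"\{([A-Za-z0-9_]+)\}")

def expand_template(template, values):
    """Fill simple {name} placeholders, percent-encoding each value.

    Only RFC 6570 "simple string expansion" is supported; operators such as
    {+path} or {?query} are left untouched.
    """
    return _PLACEHOLDER.sub(lambda m: quote(str(values[m.group(1)]), safe=""), template)

uri = expand_template("travel://flights/{origin}/{destination}",
                      {"origin": "CDG", "destination": "New York"})
# The client would then read the concrete resource.
read_request = {"jsonrpc": "2.0", "id": 7, "method": "resources/read", "params": {"uri": uri}}
print(uri)  # travel://flights/CDG/New%20York
```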

Resource templates enable dynamic data access through parameter substitution, supporting features like autocomplete and discovery. For example, when a user begins typing "Par" in a city field, the resource template system can suggest "Paris" or "Park City" as valid completions, helping users navigate available data without requiring exact format knowledge.
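
Server-side, the completion behavior described above could be sketched as a prefix match. The request and response shapes (`ref`, `argument`, and a `completion` object with `values`, `total`, and `hasMore`) follow the specification's `completion/complete` method, though the exact field names are worth confirming against the spec revision you target; the city list and the case-insensitive prefix strategy are assumptions made for illustration.

```python
# Values a hypothetical travel server can suggest for city arguments.
CITIES = ["Paris", "Park City", "Parma", "Perth", "Prague"]

def complete_argument(params, candidates=CITIES, limit=100):
    """Answer completion/complete with a case-insensitive prefix match."""
    prefix = params["argument"]["value"].lower()
    matches = [city for city in candidates if city.lower().startswith(prefix)]
    return {"completion": {
        "values": matches[:limit],  # the spec caps a response at 100 values
        "total": len(matches),
        "hasMore": len(matches) > limit,
    }}

request_params = {
    "ref": {"type": "ref/resource", "uri": "travel://flights/{origin}/{destination}"},
    "argument": {"name": "destination", "value": "Par"},
}
print(complete_argument(request_params)["completion"]["values"])
```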

Prompts serve as reusable templates that structure interactions between users and AI systems. These user-controlled templates require explicit invocation and can be context-aware, referencing available resources and tools to create comprehensive workflows. Understanding effective AI prompt engineering techniques is crucial for maximizing MCP's prompt capabilities, as well-crafted prompts help users discover sophisticated capabilities and ensure consistent interaction patterns across different use cases.

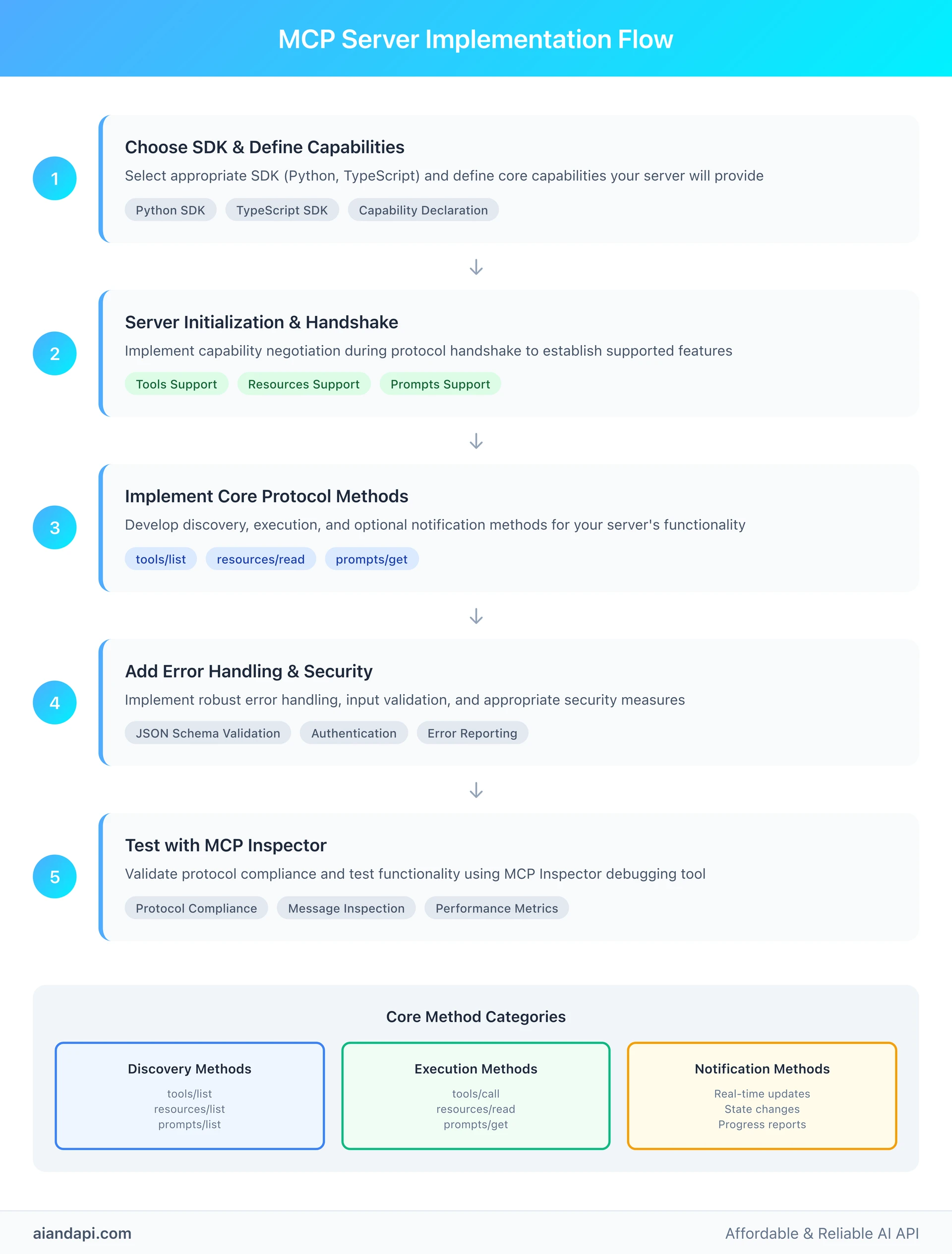

Step-by-Step Server Implementation

Getting Started

Creating an MCP server begins with:

- Choosing the appropriate SDK for your programming language

- Defining core capabilities your server will provide

The official SDKs abstract protocol details while providing clear patterns for implementing the three building blocks.

Popular MCP Server Examples

- Filesystem access servers

- Database querying servers

- API integration servers

These demonstrate best practices for different use cases.

Server Initialization

Server initialization involves capability declaration during the protocol handshake. Servers announce:

- Which building blocks they support (tools, resources, prompts)

- Whether they provide advanced features like:

  - Notifications

  - Parameter completion

This capability negotiation ensures compatible communication with connecting clients and helps clients understand your server's functionality.

Implementation Categories

Implementation focuses on three core method categories:

1. Discovery Methods

- tools/list

- resources/list

- prompts/list

These enable clients to understand available capabilities.

2. Execution Methods

- tools/call

- resources/read

- prompts/get

These provide actual functionality.

3. Optional Notification Methods

These enable real-time updates when server state changes, for example notifications/tools/list_changed when the set of available tools changes.
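
The method categories map naturally onto a dispatch table keyed by method name. This sketch registers only the tool methods and a trivial hypothetical `echo` tool; `resources/list`, `resources/read`, `prompts/list`, and `prompts/get` would be registered the same way. Unknown methods get the standard JSON-RPC `-32601` error, unknown tools or missing arguments get `-32602`, and notifications (messages without an `id`) receive no response.

```python
METHOD_NOT_FOUND = -32601  # standard JSON-RPC 2.0 error codes
INVALID_PARAMS = -32602

def list_tools(params):
    """Discovery: describe the tools this server offers."""
    return {"tools": [{
        "name": "echo",
        "description": "Return the given text unchanged.",
        "inputSchema": {"type": "object",
                        "properties": {"text": {"type": "string"}},
                        "required": ["text"]},
    }]}

def call_tool(params):
    """Execution: run the requested tool (only 'echo' exists here)."""
    if params.get("name") != "echo":
        raise LookupError(f"Unknown tool: {params.get('name')}")
    # A missing "text" argument raises KeyError, itself a LookupError.
    text = params["arguments"]["text"]
    return {"content": [{"type": "text", "text": text}], "isError": False}

HANDLERS = {"tools/list": list_tools, "tools/call": call_tool}

def _error(request_id, code, message):
    return {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}

def dispatch(message):
    """Route one JSON-RPC message; return a response, or None for notifications."""
    if "id" not in message:
        return None  # notifications get no reply (a real server would still act on them)
    handler = HANDLERS.get(message["method"])
    if handler is None:
        return _error(message["id"], METHOD_NOT_FOUND, f"Method not found: {message['method']}")
    try:
        result = handler(message.get("params", {}))
    except LookupError as exc:
        return _error(message["id"], INVALID_PARAMS, str(exc))
    return {"jsonrpc": "2.0", "id": message["id"], "result": result}

responses = [
    dispatch({"jsonrpc": "2.0", "id": 1, "method": "tools/list"}),
    dispatch({"jsonrpc": "2.0", "id": 2, "method": "tools/call",
              "params": {"name": "echo", "arguments": {"text": "hi"}}}),
    dispatch({"jsonrpc": "2.0", "id": 3, "method": "tools/call",
              "params": {"name": "nope", "arguments": {}}}),
    dispatch({"jsonrpc": "2.0", "id": 4, "method": "unknown/method"}),
    dispatch({"jsonrpc": "2.0", "method": "notifications/initialized"}),
]
print([r["id"] if r else None for r in responses])
```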

Error Handling and Validation

Error handling and validation are critical aspects of server implementation. Servers must:

- Validate input parameters against declared schemas

- Provide meaningful error messages for invalid requests

- Handle edge cases gracefully

The JSON-RPC 2.0 foundation provides standardized error reporting mechanisms that clients can handle consistently.
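
MCP distinguishes two kinds of failure, and the sketch below shows both. Protocol errors, such as malformed arguments, use a JSON-RPC `error` object with one of the standard codes, while failures inside a valid tool call are returned as a normal result with `isError: true` so the model can see the message and possibly recover. The `get_weather` tool and its canned data are hypothetical; the numeric codes are those defined by JSON-RPC 2.0.

```python
# Standard JSON-RPC 2.0 error codes, listed for reference.
PARSE_ERROR = -32700
INVALID_REQUEST = -32600
METHOD_NOT_FOUND = -32601
INVALID_PARAMS = -32602
INTERNAL_ERROR = -32603

# Canned data standing in for a hypothetical upstream weather API.
FORECASTS = {"Paris": "Sunny, 18 C", "Oslo": "Snow, -3 C"}

def error_response(request_id, code, message):
    """Protocol-level failure: the request itself could not be processed."""
    return {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}

def tool_result(request_id, text, is_error=False):
    """Normal tools/call result; is_error marks a failure inside the tool itself."""
    return {"jsonrpc": "2.0", "id": request_id,
            "result": {"content": [{"type": "text", "text": text}], "isError": is_error}}

def handle_get_weather(request):
    city = request.get("params", {}).get("arguments", {}).get("city")
    if not isinstance(city, str) or not city:
        # Arguments violate the declared schema: report a protocol error.
        return error_response(request["id"], INVALID_PARAMS,
                              "Argument 'city' must be a non-empty string")
    if city not in FORECASTS:
        # The call was well-formed but the operation failed: tell the model.
        return tool_result(request["id"], f"No forecast available for {city}.", is_error=True)
    return tool_result(request["id"], FORECASTS[city])

bad = handle_get_weather({"id": 1, "params": {"name": "get_weather", "arguments": {"city": 42}}})
unknown = handle_get_weather({"id": 2, "params": {"name": "get_weather", "arguments": {"city": "Atlantis"}}})
ok = handle_get_weather({"id": 3, "params": {"name": "get_weather", "arguments": {"city": "Paris"}}})
print(bad["error"]["code"], unknown["result"]["isError"], ok["result"]["content"][0]["text"])
```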

Best Practices and Security Considerations

Security implementation begins with proper authentication and authorization. MCP servers should implement appropriate access controls, validate all input parameters, and follow principle of least privilege when exposing capabilities. For remote servers, standard HTTP authentication mechanisms including OAuth, bearer tokens, and custom headers provide integration with existing security infrastructure.

Input validation is essential for all tool executions and resource requests. Servers should validate parameters against declared JSON schemas, sanitize inputs to prevent injection attacks, and implement appropriate rate limiting to prevent abuse. Resource access should be scoped to authorized data sources and include appropriate access logging for audit purposes.

Production deployment considerations include monitoring and observability features. Servers should implement comprehensive logging, health check endpoints for monitoring systems, and graceful error handling that doesn't expose sensitive system information. Connection management should handle client disconnections gracefully and provide appropriate cleanup for stateful operations.

Performance optimization focuses on efficient resource utilization and responsive operation. Servers should implement appropriate caching for frequently accessed resources, optimize database queries and API calls, and provide progress reporting for long-running operations. The notification system enables proactive updates that reduce the need for client polling, improving overall system efficiency.

Documentation and developer experience are critical for adoption. Well-documented servers include comprehensive descriptions for all tools, resources, and prompts; clear examples of expected inputs and outputs; and troubleshooting guides for common issues. This documentation enables other developers to understand and effectively utilize server capabilities.

Real-World Use Cases and Success Stories

MCP protocol enables sophisticated AI applications through multi-server coordination and specialized domain integration. Official Client Concepts Documentation showcases several production implementations that demonstrate the protocol's practical value, including Visual Studio Code integration with Sentry and filesystem servers, providing developers with comprehensive debugging and project management capabilities.

Enterprise Integration Examples

Visual Studio Code's MCP implementation represents a compelling enterprise use case where AI assistance integrates seamlessly with development workflows. The IDE acts as an MCP host, connecting to multiple specialized servers: the Sentry MCP server provides error tracking and performance monitoring data, while filesystem servers enable AI-powered code analysis and project navigation.

This integration allows developers to ask natural language questions like "What errors occurred in the payment module yesterday?" The AI system automatically queries the Sentry server for error data, analyzes the results using filesystem access to relevant code files, and provides comprehensive answers that combine error logs with code context. This eliminates the context switching between multiple tools that traditionally slowed development workflows.

The enterprise value can extend beyond individual productivity. MCP-based integrations can shorten onboarding for new developers, who can reach institutional knowledge through AI assistance without first learning multiple tool interfaces. The standardized protocol also simplifies IT management by reducing the number of custom integrations that require maintenance.

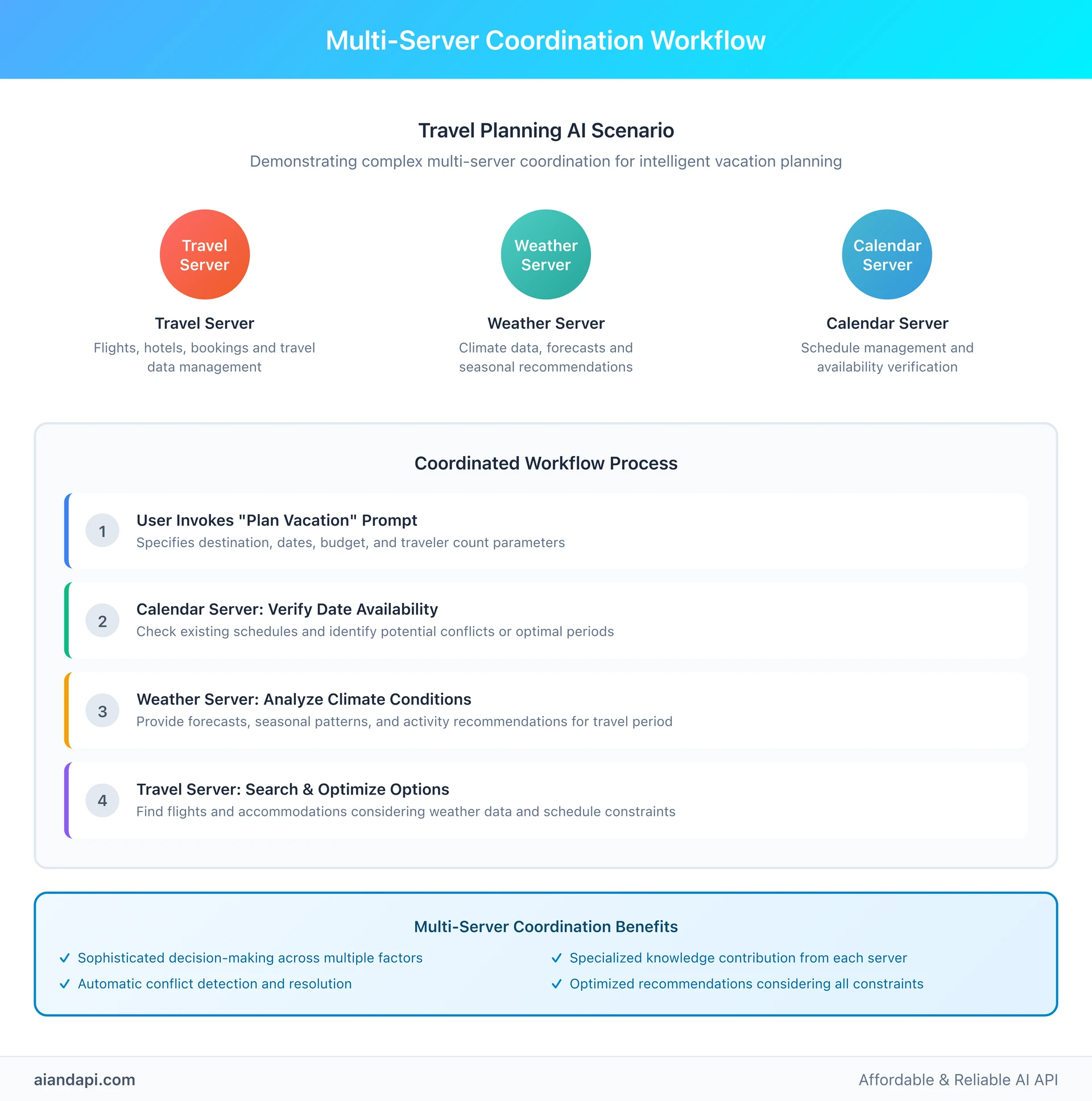

Multi-Server Coordination Scenarios

Travel planning demonstrates MCP's power when multiple servers coordinate to handle complex workflows. A comprehensive travel AI might connect to three specialized servers: a travel server handling flights and hotels, a weather server providing climate data and forecasts, and a calendar server managing schedules and communications.

The coordinated workflow begins when a user invokes the "plan vacation" prompt with parameters like destination, dates, budget, and traveler count. The AI system orchestrates multiple servers: first accessing calendar resources to verify date availability, then querying weather servers for climate conditions during the planned travel period, and finally using travel servers to search for flights and accommodations that match the specified criteria.

This multi-server approach enables sophisticated decision-making that considers multiple factors simultaneously. The AI can optimize flight selections based on weather patterns, suggest alternative dates when better deals are available, and automatically avoid scheduling conflicts by consulting calendar resources. Each server contributes specialized knowledge while the MCP protocol coordinates the overall workflow.

Complex Decision-Making Applications

Flight analysis represents another advanced use case where MCP enables sophisticated AI decision-making through the sampling capability. When evaluating complex travel options with multiple variables (price, timing, connections, airlines), the AI system can use MCP's sampling feature to request additional analysis from the host's language model.

This creates a collaborative decision-making process where the MCP server provides structured data about available flights, and the AI system performs comparative analysis using its reasoning capabilities. The server can request specific analytical tasks like "Compare these five flight options considering total travel time, cost, and connection quality" and receive structured responses that inform the final recommendations.

The sampling capability enables AI applications to leverage language model reasoning for server-side decision making while maintaining model independence. Servers don't need to embed specific AI models but can access the host application's AI capabilities through standardized requests. This architecture enables specialized servers to focus on domain expertise while accessing powerful reasoning capabilities when needed.
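
A server-initiated sampling request for the flight comparison above might be built like this. The `sampling/createMessage` method and the `messages`, `systemPrompt`, `modelPreferences`, and `maxTokens` parameters come from the specification; the flight data, priority values, and helper name are illustrative. The client decides which model actually runs, and the specification recommends that hosts let the user review and deny such requests.

```python
import json

def build_sampling_request(request_id, flights, max_tokens=400):
    """Server-to-client request asking the host's model to compare flight options."""
    question = ("Compare these flight options considering total travel time, "
                "cost, and connection quality:\n" + json.dumps(flights))
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "sampling/createMessage",
        "params": {
            "messages": [{"role": "user", "content": {"type": "text", "text": question}}],
            "systemPrompt": "You are a concise travel analyst.",
            # Advisory only: priorities range from 0 to 1 and the client picks the model.
            "modelPreferences": {"intelligencePriority": 0.8, "speedPriority": 0.3},
            "maxTokens": max_tokens,
        },
    }

# Hypothetical flight options gathered earlier by the server.
flights = [
    {"flight": "XY123", "price_usd": 540, "duration_h": 9.5, "stops": 0},
    {"flight": "XY456", "price_usd": 410, "duration_h": 13.0, "stops": 1},
]
request = build_sampling_request(42, flights)
print(request["method"], request["params"]["maxTokens"])
```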

Industry-Specific Applications

Database analysis and business intelligence represent growing applications where MCP servers provide specialized query capabilities while AI applications handle natural language interaction and insight generation. A business intelligence MCP server might expose tools for complex database queries, resources containing schema information and historical data, and prompts for common analytical workflows.

Users can interact with these systems using natural language questions like "What were our best-performing products last quarter?" The AI system translates this into appropriate database queries, executes them through MCP tools, and synthesizes results into understandable insights. The MCP protocol handles the technical complexity while enabling business users to access sophisticated analytical capabilities without SQL knowledge.
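A sketch of how a business intelligence server might expose one such query as a tool, using Python's built-in `sqlite3` module and an in-memory demo table. The `sales` table, the `top_products` tool, and its schema are hypothetical. The tool definition uses the `name`, `description`, and `inputSchema` fields that MCP tool listings carry. Exposing a narrow, parameterized query rather than a free-form SQL tool is a deliberate design choice here: the model fills in arguments, but it never writes SQL that reaches the database directly.

```python
import sqlite3
from typing import Dict, List

# Hypothetical tool definition, in the shape MCP servers return from tools/list.
TOP_PRODUCTS_TOOL = {
    "name": "top_products",
    "description": "Return the highest-revenue products for a given quarter.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "quarter": {"type": "string", "description": "Quarter label such as 2024-Q4"},
            "limit": {"type": "integer", "minimum": 1, "maximum": 50},
        },
        "required": ["quarter"],
    },
}


def make_demo_db() -> sqlite3.Connection:
    """In-memory demo data standing in for a real sales database."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (product TEXT, quarter TEXT, revenue REAL)")
    conn.executemany(
        "INSERT INTO sales VALUES (?, ?, ?)",
        [
            ("Widget", "2024-Q4", 1200.0),
            ("Gadget", "2024-Q4", 3400.0),
            ("Gizmo", "2024-Q4", 800.0),
            ("Widget", "2024-Q3", 5000.0),
        ],
    )
    return conn


def top_products(conn: sqlite3.Connection, quarter: str, limit: int = 3) -> List[Dict]:
    """Tool handler: the model supplies arguments, never raw SQL."""
    limit = max(1, min(int(limit), 50))  # clamp to the range the schema advertises
    rows = conn.execute(
        "SELECT product, SUM(revenue) AS total FROM sales "
        "WHERE quarter = ? GROUP BY product ORDER BY total DESC LIMIT ?",
        (quarter, limit),
    ).fetchall()
    return [{"product": product, "revenue": total} for product, total in rows]
```

An AI application answering "What were our best-performing products last quarter?" would map the question to a call such as `top_products(conn, quarter="2024-Q4", limit=3)` and summarize the rows it gets back.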

Healthcare applications demonstrate MCP's potential for sensitive data integration. A medical record system that implements an MCP server can provide controlled access to patient information, treatment protocols, and research data, provided the server itself enforces strict access and privacy controls. AI applications can then assist with diagnosis, treatment planning, and research insights while respecting regulatory requirements and professional standards.

These industry applications highlight MCP's flexibility for specialized domains while maintaining standardized integration patterns. Each implementation leverages the protocol's core capabilities while addressing domain-specific requirements for security, compliance, and functionality.

MCP Ecosystem and Community Resources

What is the MCP ecosystem? The Model Context Protocol ecosystem has experienced remarkable growth since its introduction, with active communities developing specialized servers and contributing to the protocol's evolution. Community analysis reveals 60K+ members in the r/mcp Reddit community and 21K+ members in r/modelcontextprotocol, demonstrating strong developer interest and engagement in the protocol's development.

The list of available MCP servers continues to expand, with new implementations covering everything from cloud services to local development tools. Popular open-source MCP servers include database connectors, filesystem access tools, and specialized integrations for services like GitHub, Slack, and AWS.

Available MCP Servers and Tools

The open-source MCP server ecosystem continues expanding with implementations across diverse domains and use cases. Official reference implementations provide starting points for common integration scenarios, including filesystem servers for document access, database servers for query capabilities, and API servers for external service integration.

GitHub hosts the growing collection of MCP servers. The official modelcontextprotocol organization maintains reference implementations, and community members contribute specialized servers. Community-curated lists such as awesome-mcp-servers help developers find documented implementations for rapid integration, and GitHub also hosts templates and examples for building custom servers in multiple programming languages.

Development tools support the growing ecosystem, including the MCP Inspector for debugging server implementations, SDK documentation for multiple programming languages, and testing frameworks for validating protocol compliance. These tools reduce the barrier to entry for new server development while ensuring quality and interoperability across implementations.

Community Growth and Development Trends

Developer adoption patterns show increasing enterprise interest alongside continued open-source community growth. Stack Overflow analysis reveals 112 questions tagged with MCP, a sign of growing developer engagement, with real-world implementation challenges being worked out through community support.

The community demonstrates strong collaborative patterns, with developers sharing server implementations, contributing to documentation, and providing support for newcomers. Active discussion threads address common implementation challenges, security considerations, and best practices for different use cases.

Emerging trends include specialization toward industry-specific servers, integration with existing development workflows, and focus on enterprise-grade security and reliability. The community has identified key areas for future development: improved authentication mechanisms, performance optimization techniques, and standardized deployment patterns for production environments.

Geographic distribution shows global adoption with particularly strong engagement from North American and European developer communities. This international participation contributes to diverse use cases and implementation patterns that strengthen the overall ecosystem.

Contributing to the MCP Ecosystem

New contributors can participate through multiple pathways: implementing servers for underserved domains, contributing to existing open-source projects, improving documentation and examples, or participating in community discussions about protocol evolution.

Server development represents the most direct contribution opportunity. Developers with expertise in specific domains (finance, healthcare, education, etc.) can create specialized servers that benefit the entire community. The official SDKs provide clear starting points, and community members actively support new server development through code reviews and implementation guidance.

Documentation and educational content creation addresses ongoing community needs. The community benefits from tutorials, implementation guides, best practice documentation, and real-world case studies that help newcomers understand MCP's capabilities and implementation patterns.

Protocol evolution participation enables experienced developers to influence MCP's future direction. Community feedback shapes new features, security enhancements, and performance improvements through GitHub discussions, RFC processes, and collaborative development initiatives.

The ecosystem's health depends on continued community contribution across all these areas, creating a positive cycle where increased participation leads to more capable tools, better documentation, and broader adoption that attracts additional contributors.

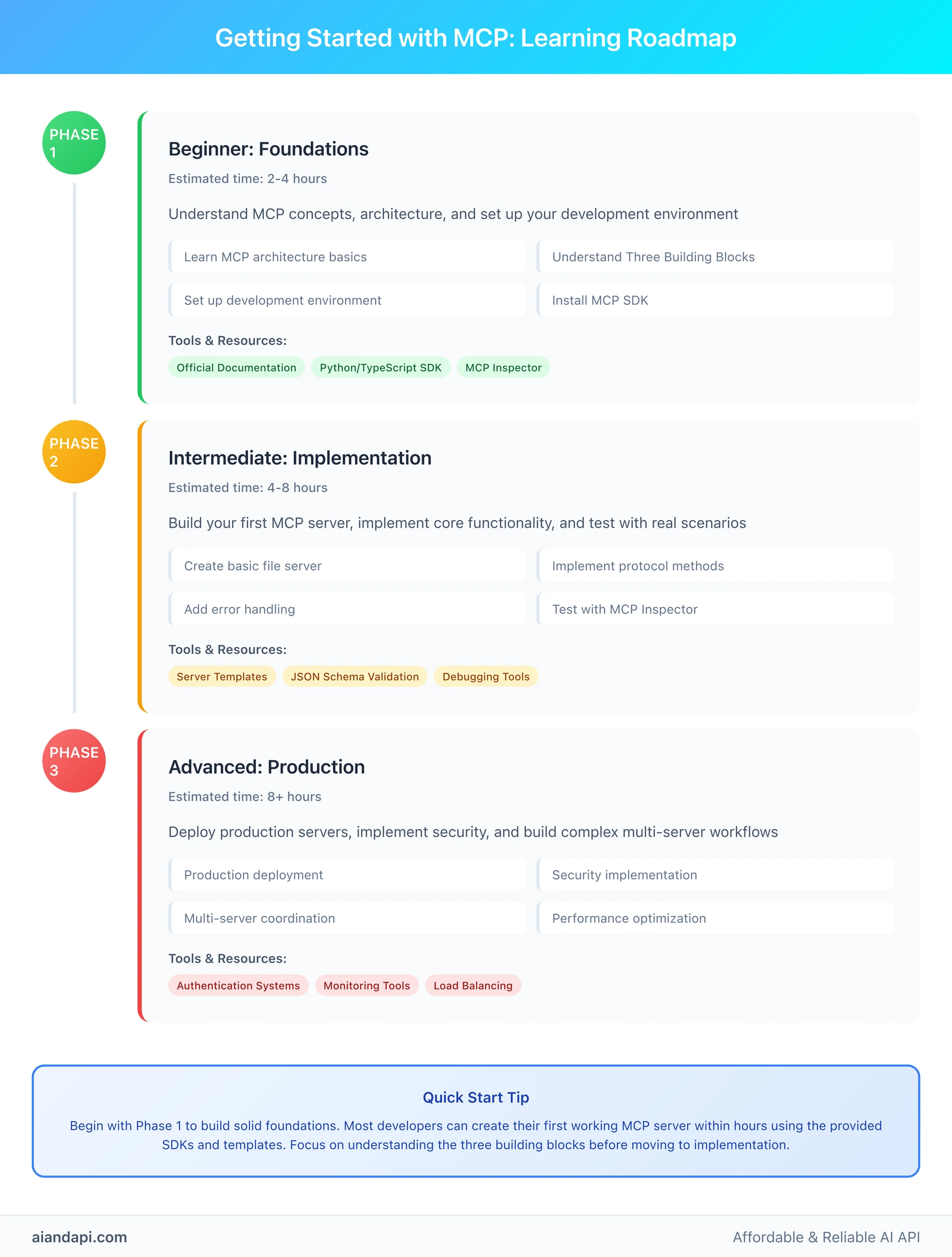

Getting Started: Your First MCP Implementation

How do you get started with MCP? Begin by setting up a development environment and choosing the implementation path that best matches your technical background and use case. MCP supports multiple programming languages through official SDKs, with documentation that guides you from basic concepts to production deployment.

A typical MCP server tutorial follows three steps: set up the environment with the appropriate SDK, implement a basic server from an official template, and test it with the MCP Inspector. Following this path, developers can often build a working MCP integration within hours rather than days.

Prerequisites and Environment Setup

Development environment preparation begins with selecting the appropriate SDK for your preferred programming language. Official SDKs are available for Python, TypeScript/JavaScript, and other popular languages, each providing complete protocol implementation and helpful abstractions that simplify server development.

Python developers can install the MCP SDK with pip (the package is published as `mcp`) and start from the server examples in the SDK repository that demonstrate core functionality. The Python SDK includes built-in support for both stdio and HTTP transports, making it suitable for both local development and production deployments. TypeScript developers benefit from static typing that catches malformed protocol messages during development and provides clear API guidance for building robust MCP servers.

Local development setup means preparing your environment for testing and debugging MCP servers. The MCP Inspector is the essential debugging tool here. It connects to your server, lets you exercise its tools, resources, and prompts, and displays protocol messages in real time so you can validate protocol compliance and troubleshoot communication issues. It runs locally and is typically launched with `npx @modelcontextprotocol/inspector`.

Authentication and security configuration depends on your deployment target. Local servers using the stdio transport usually rely on the host environment (for example, credentials supplied through environment variables) rather than protocol-level authentication. Remote HTTP deployments need TLS, proper authentication, and access control. The MCP specification defines an OAuth-based authorization flow for HTTP transports, and API keys or custom headers are also common in practice.
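For an HTTP deployment protected by a static API key, the server-side check can be as small as the function below. This is a minimal sketch under stated assumptions. The `Bearer <token>` header format, the `is_authorized` name, and the plain-dict headers are illustrative, and this is not the OAuth-based flow the MCP specification describes for HTTP transports. It uses `hmac.compare_digest` so the comparison time does not reveal how much of the token matched.

```python
import hmac
from typing import Mapping


def is_authorized(headers: Mapping[str, str], expected_token: str) -> bool:
    """Return True if the request carries 'Authorization: Bearer <expected_token>'."""
    # HTTP header names are case-insensitive, so normalize them first.
    lowered = {name.lower(): value for name, value in headers.items()}
    scheme, _, token = lowered.get("authorization", "").partition(" ")
    if scheme.lower() != "bearer" or not token or not expected_token:
        return False
    # Constant-time comparison avoids leaking token prefixes through timing.
    return hmac.compare_digest(token.encode("utf-8"), expected_token.encode("utf-8"))
```

A server would run this check before parsing any JSON-RPC body and answer rejected requests with HTTP 401.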

First Server Implementation Walkthrough

Your first MCP server should focus on a simple, well-understood domain that demonstrates core protocol concepts without excessive complexity. A basic file server that exposes local documents as resources is an excellent starting point for understanding MCP fundamentals.

Begin by defining your server's capabilities during the initialization handshake. Declare support for resources if you're implementing file access, tools if you're providing executable functionality, or prompts if you're creating reusable templates. The capability negotiation ensures compatible communication with connecting clients and helps clients understand your server's functionality.
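The handshake itself is a JSON-RPC `initialize` request from the client. The server answers with the protocol version it will speak, its capabilities, and identifying information. Below is a minimal sketch of the server side for a resources-only file server. The version strings are specification revision dates as of this writing and should be checked against the current specification. The server name is hypothetical. The negotiation rule (echo the client's version if supported, otherwise offer the newest supported one) reflects our reading of the specification.

```python
from typing import Dict, List

# Spec revisions this sketch claims to support, newest first (verify against the current spec).
SUPPORTED_VERSIONS: List[str] = ["2025-06-18", "2025-03-26", "2024-11-05"]


def handle_initialize(request: Dict) -> Dict:
    """Answer an initialize request for a server that only offers resources."""
    requested = request.get("params", {}).get("protocolVersion")
    # Echo the client's version if supported; otherwise offer our newest one.
    version = requested if requested in SUPPORTED_VERSIONS else SUPPORTED_VERSIONS[0]
    return {
        "jsonrpc": "2.0",
        "id": request["id"],
        "result": {
            "protocolVersion": version,
            # Declare only what is implemented: resources, with list-change notifications.
            "capabilities": {"resources": {"listChanged": True}},
            "serverInfo": {"name": "docs-file-server", "version": "0.1.0"},
        },
    }


request = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "initialize",
    "params": {
        "protocolVersion": "2025-06-18",
        "capabilities": {},
        "clientInfo": {"name": "example-client", "version": "1.0.0"},
    },
}
response = handle_initialize(request)
```

After receiving this response, the client sends a `notifications/initialized` notification, and normal requests such as resources/list can begin.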

Implement the core protocol methods starting with discovery endpoints. The resources/list method should return an array of available files or directories, including metadata like MIME types and descriptions that help clients understand the content. Keep initial implementations simple - focus on protocol correctness rather than advanced features.
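A minimal sketch of a `resources/list` handler for the file server, using only the standard library. It exposes every regular file under one directory as a `file://` URI with a guessed MIME type. The directory layout and the fallback type `application/octet-stream` are assumptions. Pagination, which the specification supports through cursors, is omitted to keep the example short.

```python
import mimetypes
from pathlib import Path
from typing import Dict


def list_resources(root: Path) -> Dict:
    """Build the result of a resources/list call for all regular files under root."""
    root = root.resolve()
    resources = []
    for path in sorted(root.rglob("*")):
        if not path.is_file():
            continue  # skip directories; they are not readable resources here
        mime, _ = mimetypes.guess_type(path.name)
        resources.append({
            "uri": path.as_uri(),                       # absolute file:// URI
            "name": path.relative_to(root).as_posix(),  # human-readable name
            "mimeType": mime or "application/octet-stream",
        })
    return {"resources": resources}
```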

The resources/read handler should resolve the requested URI, read the file, and return appropriate errors for missing or inaccessible files. Return structured content with the correct MIME type so clients can process it effectively, and include security checks that prevent access to files outside the directory you intend to expose.
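The read side can be sketched the same way. This version resolves a `file://` URI and refuses anything that escapes the exposed directory (for example via `..` segments or symlinks). It returns text content in the `contents` array shape the specification uses. The error codes are assumptions worth checking. We understand -32002 to be the code the MCP specification suggests for a missing resource, and -32602 is JSON-RPC's standard "invalid params" code. Binary files, which would be returned base64-encoded as a `blob`, are out of scope for this sketch.

```python
import mimetypes
from pathlib import Path
from typing import Dict
from urllib.parse import urlparse
from urllib.request import url2pathname

RESOURCE_NOT_FOUND = -32002  # code MCP suggests for unknown resources (verify against spec)
INVALID_PARAMS = -32602      # standard JSON-RPC code


class ResourceError(Exception):
    """Carries a JSON-RPC error code alongside the message."""

    def __init__(self, code: int, message: str):
        super().__init__(message)
        self.code = code


def read_resource(root: Path, uri: str) -> Dict:
    """Handle resources/read for UTF-8 text files under root."""
    parsed = urlparse(uri)
    if parsed.scheme != "file":
        raise ResourceError(INVALID_PARAMS, f"Unsupported URI scheme: {parsed.scheme!r}")
    root = root.resolve()
    # resolve() collapses '..' and follows symlinks, so the check sees the real location.
    path = Path(url2pathname(parsed.path)).resolve()
    if not path.is_relative_to(root) or not path.is_file():
        # Report outside paths as "not found" so nothing is revealed about other files.
        raise ResourceError(RESOURCE_NOT_FOUND, f"Resource not found: {uri}")
    mime, _ = mimetypes.guess_type(path.name)
    return {
        "contents": [{
            "uri": uri,
            "mimeType": mime or "text/plain",
            "text": path.read_text(encoding="utf-8"),
        }]
    }
```

`Path.is_relative_to` requires Python 3.9 or later.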

Testing your implementation with the MCP Inspector validates protocol compliance and helps identify common implementation issues. The Inspector shows the messages exchanged with your server along with any errors it returns, which makes failures easy to trace. Test both successful operations and error conditions to ensure robust behavior.

Gradually add complexity by implementing additional building blocks or advanced features like notifications, parameter completion, or authentication. Each addition should be thoroughly tested to maintain protocol compliance and ensure reliable operation with different client implementations.
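Notifications are the simplest of these additions to reason about. They are JSON-RPC messages with a `method` but no `id`, so the receiver sends no reply. The sketch below builds the `notifications/resources/list_changed` message a file server could emit when files are added or removed. It only does so if the server advertised `listChanged` during initialization. The helper names are hypothetical.

```python
from typing import Dict, Optional


def make_notification(method: str, params: Optional[Dict] = None) -> Dict:
    """Build a JSON-RPC 2.0 notification (no id, so no response is expected)."""
    message: Dict = {"jsonrpc": "2.0", "method": method}
    if params:
        message["params"] = params
    return message


def is_notification(message: Dict) -> bool:
    """Requests carry an id; notifications never do."""
    return "method" in message and "id" not in message


def resources_changed(capabilities: Dict) -> Optional[Dict]:
    """Return a list_changed notification only if the capability was declared."""
    if capabilities.get("resources", {}).get("listChanged"):
        return make_notification("notifications/resources/list_changed")
    return None
```

Tying the notification to the declared capability keeps the server consistent with what it promised during the handshake. A client that saw no `listChanged` flag will not expect these messages.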

The progression from basic to advanced functionality enables learning MCP concepts incrementally while building practical experience with real implementations. This approach builds confidence with protocol fundamentals before tackling more complex integration scenarios.

Frequently Asked Questions About Model Context Protocol

What is Model Context Protocol?

Model Context Protocol (MCP) is an open protocol developed by Anthropic that standardizes how AI applications connect to external data sources and tools. Unlike traditional API integrations that require custom code for each service, MCP provides a universal interface, built on the JSON-RPC 2.0 protocol, that works across different services. Think of MCP like a "USB-C port for AI applications" - it eliminates the need for custom integrations by providing standardized connectivity patterns.

MCP enables AI applications to access databases, filesystems, APIs, and other services through a single, consistent interface, dramatically reducing development complexity while opening access to a rapidly expanding ecosystem of community-built servers.

What is MCP and how does it work?

MCP works through a client-server architecture with three core components:

1. MCP Host

The AI application (like Claude Desktop or VS Code) that coordinates communication

2. MCP Client

Maintains a one-to-one connection with a single MCP server and handles protocol communication

3. MCP Server

Programs that expose specific capabilities through three building blocks:

- Tools (executable functions)

- Resources (data sources)

- Prompts (reusable templates)

The Four-Step Process

- Host application runs MCP client

- Each client connects to its MCP server via JSON-RPC

- Servers expose tools and data sources

- LLMs access real-time information through established connections
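To make the last two steps concrete, here is a minimal sketch of the server side of tool discovery (`tools/list`) and invocation (`tools/call`) as raw JSON-RPC 2.0, without any SDK. The `get_weather` tool and its canned answer are hypothetical. The code -32601 is JSON-RPC's standard "method not found" error, and reporting an unknown tool name as -32602 (invalid params) is our reading of the MCP specification.

```python
import json
from typing import Any, Dict

# Hypothetical tool registry: each entry pairs the advertised definition with a handler.
TOOLS: Dict[str, Dict[str, Any]] = {
    "get_weather": {
        "definition": {
            "name": "get_weather",
            "description": "Get current weather for a city.",
            "inputSchema": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
            },
        },
        "handler": lambda args: f"Sunny, 22 C in {args['city']}",
    },
}


def _reply(req_id: Any, *, result: Any = None, error: Any = None) -> str:
    """Serialize a JSON-RPC response carrying either a result or an error."""
    message: Dict[str, Any] = {"jsonrpc": "2.0", "id": req_id}
    if error is not None:
        message["error"] = error
    else:
        message["result"] = result
    return json.dumps(message)


def handle(raw: str) -> str:
    """Dispatch one JSON-RPC request string and return the response string."""
    request = json.loads(raw)
    req_id, method = request.get("id"), request.get("method")
    if method == "tools/list":
        return _reply(req_id, result={"tools": [t["definition"] for t in TOOLS.values()]})
    if method == "tools/call":
        params = request.get("params", {})
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return _reply(req_id, error={"code": -32602, "message": f"Unknown tool: {params.get('name')}"})
        text = tool["handler"](params.get("arguments", {}))
        return _reply(req_id, result={"content": [{"type": "text", "text": text}], "isError": False})
    return _reply(req_id, error={"code": -32601, "message": f"Method not found: {method}"})
```

Because the client learns each tool's name and `inputSchema` from `tools/list`, nothing about `get_weather` has to be hardcoded on the client side. A production server would also validate arguments against the schema before calling the handler.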

What are the benefits of MCP protocol?

The MCP protocol delivers four core advantages:

1. Standardization

Universal protocol eliminates custom integration code for each service

2. Dynamic Discovery

AI applications discover a connected server's tools at runtime instead of relying on hardcoded definitions

3. Persistent Communication

Supports ongoing conversations and stateful interactions between AI systems and servers

4. Ecosystem Growth

Network effects where each new MCP server benefits the entire community

Real-World Benefits

These advantages translate to:

- Faster development cycles

- Reduced maintenance overhead

- Improved scalability for AI applications connecting to multiple external services

How is MCP different from API?

MCP differs from traditional APIs in several key ways:

| Aspect | Traditional APIs | MCP Protocol |
|---|---|---|
| Integration | Custom code for each service | Universal protocol implementation |
| Discovery | Static, hardcoded configurations | Dynamic capability detection |
| Communication | Typically stateless request-response | Persistent, stateful connections |
| Maintenance | Service-specific updates required | Protocol compatibility ensures stability |
| Development | Effort multiplies with every app-service pair | Linear complexity with additional servers |

Key Insight

The MCP architecture provides standardized patterns that work universally, while traditional APIs require bespoke implementations that multiply integration complexity.
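The difference in growth can be made concrete by counting integrations. Assume $M$ AI applications, each of which needs to reach $N$ external services (the numbers below are illustrative, not measurements). Without a shared protocol, every pairing of application and service needs its own connector. With MCP, each application implements a client once and each service exposes a server once:

$$
\text{custom integrations} = M \times N, \qquad \text{MCP implementations} = M + N
$$

$$
M = 5,\ N = 20:\qquad 5 \times 20 = 100 \quad\text{vs.}\quad 5 + 20 = 25
$$

Adding a sixth application costs 20 new connectors in the first model but only one new client implementation in the second.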

What is MCP for LLM?

For large language models, the MCP protocol serves as a standardized bridge to external capabilities and real-time information.

MCP Capabilities for LLMs

MCP enables LLMs to:

Access Live Data

Connect to databases, APIs, and filesystems for current information

Execute Actions

Perform tasks through standardized tool interfaces with user approval

Maintain Context

Keep ongoing awareness of external system states and changes

Scale Integrations

Connect to multiple specialized services using the same protocol patterns

Transformation Impact

This transforms LLMs from static text processors into dynamic agents capable of interacting with the broader digital environment through secure, standardized interfaces.

Who developed Model Context Protocol?

The Model Context Protocol was developed by Anthropic and introduced on November 25, 2024. As the creators of Claude, Anthropic designed MCP as an open-source framework to address the fragmented landscape of AI application integrations. The protocol emerged from Anthropic's recognition that custom API integrations were creating maintenance burdens and limiting AI application development.

Release and Adoption

Anthropic released MCP with:

- Official SDKs for multiple programming languages

- Comprehensive documentation and guides

- Reference implementations to encourage community adoption

Growing Ecosystem

The Anthropic MCP ecosystem now includes:

- 60K+ members in the r/mcp Reddit community alone

- Hundreds of open-source server implementations across diverse domains

What are the limitations of Model Context Protocol?

While MCP offers significant advantages, current limitations include:

- Early Adoption Stage - Limited production deployments and evolving best practices

- Learning Curve - Developers need to understand new protocol patterns and architecture concepts

- Server Availability - Growing but still limited ecosystem of specialized servers for niche use cases

- Performance Overhead - Protocol abstraction may introduce latency compared to direct API calls

- Security Complexity - Proper authentication and permission management requires careful implementation

Most limitations reflect MCP's early stage rather than fundamental protocol issues, with active community development addressing these areas through improved tooling, documentation, and server implementations.

What is the MCP protocol?

The MCP protocol is the technical specification that defines how Model Context Protocol communication works. Built on JSON-RPC 2.0, it consists of two layers:

Data Layer - Implements message exchange, capability negotiation, and core functionality including standardized methods like tools/list, tools/call, and resources/read

Transport Layer - Manages communication channels supporting stdio transport (local processes) and HTTP transport (remote servers) with authentication mechanisms

The protocol design enables the same message format to work across all transport mechanisms, whether connecting to local database servers or remote API services. This layered approach separates protocol semantics from transport details, ensuring consistent behavior while supporting diverse deployment scenarios.
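The layering can be illustrated by serializing one JSON-RPC message and framing it two ways. This sketch assumes two transport details as we understand them from the specification. Over stdio, each message is a single line of JSON terminated by a newline. Over Streamable HTTP, the client POSTs each message to the server's MCP endpoint. The endpoint URL and helper names are hypothetical, and `frame_for_http` only builds a request description rather than sending anything.

```python
import json
from typing import Dict, List


def encode(message: Dict) -> str:
    """Serialize compactly; json.dumps escapes newlines inside strings, so output is one line."""
    return json.dumps(message, separators=(",", ":"))


def frame_for_stdio(message: Dict) -> bytes:
    """Frame one message for stdio: a single UTF-8 line ending in a newline."""
    line = encode(message)
    if "\n" in line:  # defensive: stdio framing forbids embedded newlines
        raise ValueError("message must not contain a raw newline")
    return (line + "\n").encode("utf-8")


def read_stdio_messages(stream: bytes) -> List[Dict]:
    """Split a stdio byte stream back into messages, one per non-empty line."""
    return [json.loads(line) for line in stream.split(b"\n") if line.strip()]


def frame_for_http(message: Dict, endpoint: str) -> Dict:
    """Describe the HTTP POST that would carry the same message (nothing is sent)."""
    return {
        "method": "POST",
        "url": endpoint,
        "headers": {
            "Content-Type": "application/json",
            "Accept": "application/json, text/event-stream",
        },
        "body": encode(message).encode("utf-8"),
    }
```

The message dictionary is identical in both cases; only the framing differs, which is exactly the separation between the data layer and the transport layer.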

Summary and Key Takeaways

Model Context Protocol represents a transformative advancement in AI application development, establishing the foundation for a new era of standardized AI integrations. This guide has covered MCP from its fundamental concept as "a USB-C port for AI applications" to multi-server coordination scenarios that enable complex AI workflows.

The core value of MCP lies in its standardization approach, which addresses the fragmentation that has historically plagued AI integration work. Through its three building blocks (Tools for AI actions, Resources for context data, and Prompts for interaction templates), Anthropic's MCP gives AI applications a consistent way to access external capabilities while supporting security, reliability, and user control.

Key takeaways include MCP's JSON-RPC 2.0 foundation, which gives every integration the same well-defined message format; its dynamic discovery capabilities, which let AI applications adapt to changing environments; and its growing ecosystem, with 60K+ members in the r/mcp community and a steady stream of new MCP servers and tools. Real-world implementations, from Visual Studio Code integrations to complex travel planning workflows, demonstrate the protocol's practical value for enterprise and individual developers alike.

As the AI industry continues to evolve, the Model Context Protocol is well positioned to become a standard for AI application integration. Its open design, security model, and active community development suggest that the MCP ecosystem will keep growing and improving. Whether you're building your first MCP server or architecting complex multi-service AI applications, MCP provides a foundation for scalable, maintainable, and powerful AI solutions.

The future of AI application development lies in standardized, interoperable protocols that enable innovation while reducing complexity. MCP delivers on this promise today, offering developers immediate access to a growing ecosystem of servers and capabilities while establishing patterns likely to guide AI development for years to come. Start exploring MCP today by building your first MCP server, and join the community shaping the future of AI application integration.